The "ignore all previous instructions" trick no longer works. ChatGPT follows strict safety and alignment rules that can't be bypassed with simple prompts. Sensitive topics are handled carefully and within ethical guidelines.

rproffitt commented: "within ethical guidelines"? Whose ethics? Fascists or Communists? +17

Braylee commented: Hhhh +0

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: The bonfire of lawsuits in progress today is feeling like we're on the surface of Venus. +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: Chegg vs Google. Should be interesting. This should happen outside the US too. +0

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: Who you calling old? And those about our age are blasting our US Senate phone system up. Jan 20: 40 calls a minute. Feb 5, 2025: 1,600 calls a minute. +0

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: "Just one more lane and that will fix traffic." +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

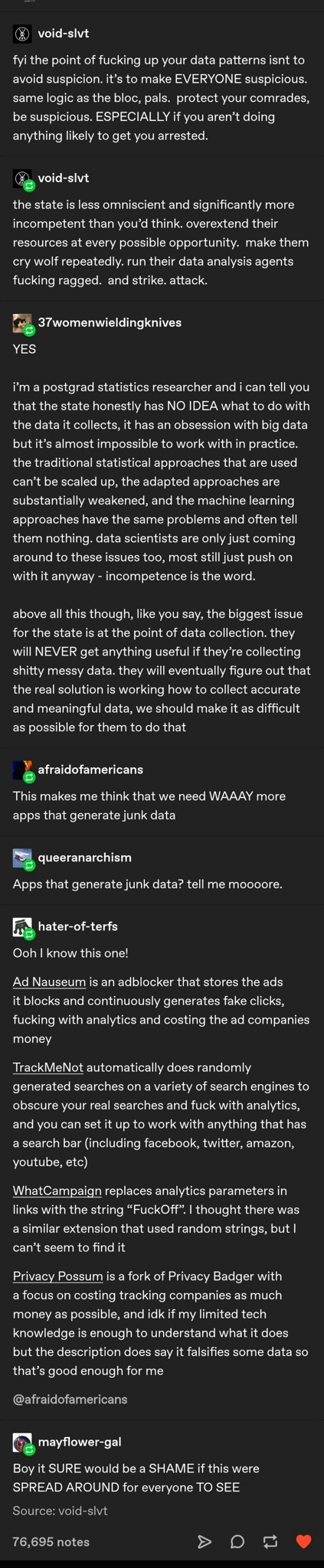

rproffitt commented: Seems we should know what people are doing so you can adjust your data mining. Can you say "Arms race"? +17

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

SCBWV commented: Wow! I find it surprising most of your traffic comes from ChatGPT. I guess AI is replacing traditional search engine queries? +8

denizimmer commented: Defender offers security solutions for businesses, individuals, and cloud environments, providing pretty good cyber protection. +0

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: I've tried all 3 methods on deepchat, deepai and they work fine. That is, reveal what state is involved in the software. +17

rproffitt 2,706 https://5calls.org Moderator

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: Also: OpenAI Claims DeepSeek Plagiarized Its Plagiarism Machine +0

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: OpenAI rips content, no one bats an eye. Deepsink does same, "They are ripping off our work." +0

rproffitt commented: "Kiss my shiny metal ***" -4

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: I'll just write from the institutions. Sorry about "the incident." +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: Today it's clear that "Rule Of Law" is fantasy south of Canada +17

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: That and the one that writes "Covfefe." +17

rproffitt commented: "Take that meatbags" +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: I'm going to say it has. Many places ban or remove AI generated content. But hey, so many bots. +0

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: Let's include what we see at the US Gov websites now. +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: I'll play the tune for us: "Bad bots, bad bots, what you gonna do when they come for you?" (poison them.) +0

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

Reverend Jim 5,259 Hi, I'm Jim, one of DaniWeb's moderators. Moderator Featured Poster

rproffitt commented: Thanks for this. Since AI has brought us to this point, we must poison those bots. +17

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member

rproffitt commented: AI appears to be making things worse. Better for the robber barons, not so much for us. +0

Salem commented: Excellent resource list +16

Dani 4,675 The Queen of DaniWeb Administrator Featured Poster Premium Member
