Roots of quadratic equation

#include<iostream.h>
#include<conio.h>
#include<math.h>
void main()
{
float a,b,c,disc,root1,root2;
cout<<"enter a"<<endl;
cin>>a;
cout<<"enter b"<<endl;
cin>>b;
cout<<"enter c"<<endl;
cin>>c;
disc=(b*b)-(4*a*c);
root1=(-b+sqrt(disc))/(2*a);
root2=(-b-sqrt(disc))/(2*a);
cout<<"root1"<<root1<<endl;
cout<<"root2"<<root2<<endl;
getch();
}

Any questions or assignments, ask me, guys!

asifalizaman

mike_2000_17

Do you have a specific question?

Otherwise, here are a few remarks on your code:

- You are using pre-standard headers like <iostream.h> and <math.h>. These shouldn't be used today (as in, not for the past 15 years or so). You should use <iostream> and <cmath> (all C++ standard headers don't have the .h, and all those taken from C (like math.h, stdlib.h, stdio.h, etc.) also omit the .h and are prefixed with the letter c).
- The conio.h header is not standard and is certainly not guaranteed to be supported anywhere, although some popular C++ compilers on Windows still support it today. There is no reason to use it here.
- Since standardization, all standard library elements (classes, functions, objects, ...) are in the std namespace. This means that accessing cout, for example, should be done either by writing std::cout everywhere you use it, by writing the line using std::cout; in the scope in which you are going to use it, or by writing the line using namespace std; in the scope in which you are using cout and other standard library components.
- The main() function is required to return an integer value. The two acceptable, standard forms for the main() function are int main() { /* .. */ } and int main(int argc, char** argv) { /* .. */ }. The return value should be 0 if the program executed correctly, and something else otherwise.
- Your formatting of the code is quite ugly. Indentation is important, and so is spacing out the code. In other words, you must make an effort to make your code clearer and more readable.
- You should declare your variables as close as possible to where you first use them, and don't create variables with uninitialized values; these are both bad habits that make for error-prone code. Some 30 years ago, it used to be required to declare all variables at the start of the function; it is now an antiquated practice and considered poor style and error-prone.

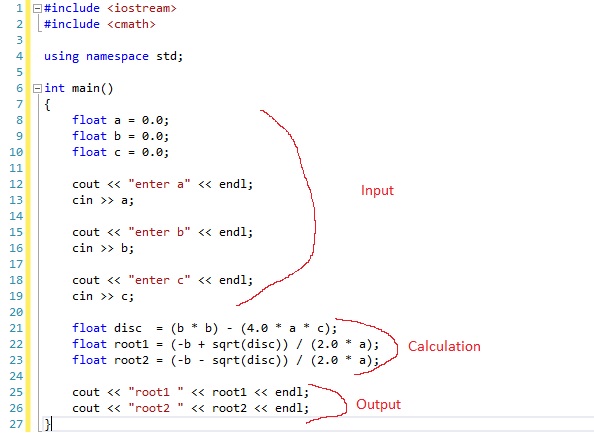

With all this considered, here is a more modern and standard compliant version of your code:

// Roots of quadratic equation
#include <iostream>
#include <cmath>

using namespace std;

int main()
{
    cout << "enter a" << endl;
    float a = 0.0;
    cin >> a;

    cout << "enter b" << endl;
    float b = 0.0;
    cin >> b;

    cout << "enter c" << endl;
    float c = 0.0;
    cin >> c;

    float disc = (b * b) - (4.0 * a * c);
    float root1 = (-b + sqrt(disc)) / (2.0 * a);
    float root2 = (-b - sqrt(disc)) / (2.0 * a);

    cout << "root1 " << root1 << endl;
    cout << "root2 " << root2 << endl;

    cin.ignore(); // This replaces
    cin.get();    // getch(); (not exactly, but it's good enough)

    return 0;
}

Also, note that your code is missing a check for division by zero (if a is zero or near zero) and a check for complex roots (if the discriminant is negative). Without them, the division by zero produces an infinity or a NaN, and the square root of a negative number produces a NaN (NaN means "Not a Number"). Not to mention the problem of the user entering things other than numbers (although that is generally not part of a basic assignment problem like this one).
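One way to add those checks is sketched below, assuming you would rather report complex roots than reject them. The `QuadraticRoots` struct, the `solve_quadratic` function and the tolerance `eps` are illustrative names and an illustrative threshold, not something from the original post.

```cpp
#include <cmath>
#include <complex>

// Hypothetical result type for a*x^2 + b*x + c = 0.
struct QuadraticRoots {
    bool is_quadratic = false;  // false when |a| is below the tolerance
    bool real = false;          // true when the discriminant is >= 0
    std::complex<double> root1;
    std::complex<double> root2;
};

// Sketch only: eps is an assumed absolute tolerance for "a is near zero".
QuadraticRoots solve_quadratic(double a, double b, double c, double eps = 1e-12)
{
    QuadraticRoots r;
    if (std::fabs(a) < eps) {
        return r;  // dividing by 2*a would give an infinity or a NaN
    }
    r.is_quadratic = true;

    const double disc = b * b - 4.0 * a * c;
    const double two_a = 2.0 * a;
    if (disc >= 0.0) {
        // Two real roots (equal when disc == 0).
        const double s = std::sqrt(disc);
        r.real = true;
        r.root1 = (-b + s) / two_a;
        r.root2 = (-b - s) / two_a;
    } else {
        // Negative discriminant: a complex-conjugate pair of roots.
        const double s = std::sqrt(-disc);
        r.root1 = std::complex<double>(-b / two_a, s / two_a);
        r.root2 = std::complex<double>(-b / two_a, -s / two_a);
    }
    return r;
}
```

In a `main()`, the coefficients could be read with `if (!(std::cin >> a >> b >> c))` to catch non-numeric input before calling `solve_quadratic`; printing a `std::complex` with `<<` gives the form `(re,im)`.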

deceptikon

commented:

Nice.

deceptikon

You should use <iostream> and <cmath> (all C++ standard headers don't have the .h, and all those taken from C (like math.h, stdlib.h, stdio.h, etc.) also omit the .h and are prefixed with the letter c).

It's also important to remember that some compilers (Microsoft's in particular) "helpfully" don't require std qualification on a large portion of the C library. But if you rely on that, your code will not be portable and will fail to compile on other compilers without a using directive. And a using directive isn't generally considered good practice in new code, because it defeats the purpose of namespaces.
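The two portable alternatives to a blanket using directive can be sketched like this (the functions `hypotenuse` and `describe` are made up for illustration): qualify each name with `std::`, or put a using-declaration in the narrowest scope that needs it.

```cpp
#include <cmath>
#include <sstream>
#include <string>

// Option 1: qualify each standard-library name explicitly.
// This compiles everywhere, whether or not <cmath> also exposes ::sqrt.
double hypotenuse(double x, double y)
{
    return std::sqrt(x * x + y * y);
}

// Option 2: a using-declaration at function scope imports only the
// listed name, and only inside this function.
std::string describe(double value)
{
    using std::ostringstream;
    ostringstream out;
    out << "value = " << value;
    return out.str();
}
```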

The return value should be 0 if the program executed correctly, and something else otherwise.

The final nail in the coffin for void main() was standard C++'s feature that allows you to omit the return statement and a return value of 0 will be assumed. This only works for the main() function, but it means you can do this:

int main()
{
}

And the code is perfectly legal and portable across the board. It even saves you a keystroke over void main(), so there are no excuses anymore.

You should declare your variables as close as possible to where you first use them

That's debatable. I understand the intention, but if a function is large enough that declaring variables at the top of each scope can be described as error prone, you should probably do a few iterations of refactoring.

don't create variables with uninitialized values

Also debatable. Once again, I understand the intention. But a decent programmer shouldn't fall prey to uninitialized variables even without the warnings that any decent compiler will throw. And if you do fall prey to them and ignore the warnings, I can all but guarantee you've got bigger problems than uninitialized variables.

Some 30 years ago, it used to be required to declare all variables at the start of the function

The rule was actually that declarations had to come before the first executable statement in their scope, which means you can define variables local to any compound statement and organize your code quite neatly that way. For example, before the for loop allowed declarations in its initialization clause, the trick to achieve the same behavior was to wrap the whole thing in a compound statement:

{
    int i;
    for (i = 0; i < n; i++) {
        /* ... */
    }
}
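For comparison, here is that idiom next to the form that C99 and C++ allow; in both, `i` exists only for the duration of the loop. The function names are hypothetical.

```cpp
// Pre-C99 style: wrap the loop in a compound statement so that i is
// declared at the start of a scope but disappears after the loop.
int sum_below_old_style(int n)
{
    int total = 0;
    {
        int i;
        for (i = 0; i < n; i++) {
            total += i;
        }
    }   // i is out of scope here
    return total;
}

// C99 / C++ style: the declaration moves into the for-init clause,
// giving i the same lifetime without the extra braces.
int sum_below_new_style(int n)
{
    int total = 0;
    for (int i = 0; i < n; i++) {
        total += i;
    }
    return total;
}
```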

it is now an antiquated practice and considered poor style and error-prone.

You keep using that word. I do not think it means what you think it means. ;) "Antiquated" isn't synonymous with "bad", you're incorrect that it's universally considered poor style, and I'm having trouble thinking of ways that it would be error prone.

asifalizaman

Nice, dear. I appreciate your knowledge, thanks.

mike_2000_17

declare your variables as close as possible to where you first use them

That's debatable.

Well, I don't mean that you should give the death penalty to those who don't follow that guideline. It's a very minor offense to good style. In other words, it's good to foster the habit of declaring variables as locally as possible, but I don't mean that you should go back and refactor all your code to bring it on par with that guideline.

And if you want to debate the issue, take it up with Herb Sutter, Andrei Alexandrescu and Bjarne Stroustrup. Not that I wouldn't have arguments of my own on that subject, but they've made better cases for this guideline in the past than I could.

don't create variables with uninitialized values

Also debatable.

Ditto.

"Antiquated" isn't synonymous with "bad",

Yeah, that's exactly why I used the word "antiquated" and not the word "bad". I meant antiquated in the sense that it is a relic of the past, an outdated practice. I guess you could say it's one kind of "bad", but I prefer the more precise term "antiquated" because it conveys what I mean. If you want to argue that antiquated isn't necessarily bad, well, maybe not always, but I didn't say "bad", so you're barking up the wrong tree.

It's an outdated practice because it comes from the earlier days of programming, when languages had more or less built-in limitations on when the stack could grow (I don't really know why). Early languages all had this limitation, e.g., C, Pascal, Fortran 77, etc., where all variables of a function had to be listed either before the first executable statement (C or Fortran) or in a separate section (Pascal). There hasn't been a practical reason (if there ever was one) for this requirement in nearly 30 years, as far as I know. It's a practice that still lingers in legacy libraries, among old-school programmers, and in the occasional young programmer who picked up the habit from some dark place (e.g., a bad or old textbook, or an old instructor). If the word "antiquated" is not adequate, I don't know what is.

you're incorrect that it's universally considered poor style,

"Universally" contains a lot of people ;) I can't speak for all of them. I've certainly never heard people advocate for declaring all variables at the start (and leaving them uninitialized). But I guess there are many people in the "I don't care" or "never thought about it" camp. When it is brought up, though, most (if not all) of those would lean toward "it's probably better to declare variables where they are first used / initialized". On top of that, when you need a new variable, it is more convenient to just declare it on-site than to go back to the start to add it to the list, and that's why most people program that way without effort or special attention to the issue. I think you would be hard-pressed to find good-quality code (i.e., written by an experienced programmer) from the last 15 years that has this kind of "antiquated" style. I've dealt with some old libraries, and I can't recall seeing this in production code dating later than the early 90s. But, of course, anything from the 80s or earlier is riddled with this style, and that particular issue (along with gotos and short one-letter variable names) is a major source of frustration for those who sometimes dig up dusty old code like that.

and I'm having trouble thinking of ways that it would be error prone.

Ohh... where to begin. I think you and I have very different experiences in that regard, and I think it depends quite a bit on the application domain. When doing numerical analysis, matrix numerical methods, control systems, and lots of other heavily mathematical code, functions can often become large and cumbersome and there isn't much that can be done about it, i.e., long and complicated equations / numerical calculations turn into long and complicated functions, and there often isn't even a practical way to split them up just for the sake of splitting them up. Here's a simple example of that. So that's that for the "write smaller functions" guideline (which I agree with, when possible). But, of course, if most functions can be kept within manageable sizes (i.e., below 50 lines or so), then it doesn't matter much where you declare variables. The point of nurturing good coding habits is not your everyday trivial coding: most of the time those habits are inconsequential, but sometimes they are life-savers, and that's where they pay off. That's why they're always important; a habit that you save up for the harder tasks only is a habit you quickly forget.

Then, consider terrors like this example, more or less picked at random (you can always depend on good old Fortran code to send chills down your spine at the thought of having to understand, translate or debug it, all things I've had to do on very similar code). Translating or trying to understand such Fortran code is a three-step process: (1) remove the gotos (another "antiquated" practice); (2) bring the declarations of variables to their usage scope; and (3) rename the variables as you figure out what they mean. Step (2) is of critical importance. It's all about being able to manage and understand the states of the variables being used and how they change throughout the function. Reducing the scopes of variables to a minimum is very beneficial for that, which leads to a better understanding of the code and, thus, fewer mistakes when writing / maintaining / changing / debugging it.

Another reason (beyond clarity) why declaring all variables at the start (often uninitialized) is error-prone is that it encourages the much nastier practice of reusing variables for multiple purposes. That practice is not only bad for clarity (since you can't give variables specific and meaningful names, and it obscures the relevant states of the function), but it can also lead to nasty bugs if you inadvertently move some code around, changing the behavior through interference between the multiple purposes of the variables concerned. There are countless other similar pitfalls. For example, I once saw code in which a global static array was used for some intermediate results at two different places in a function. Someone then noticed that even though it had 8 elements, only 7 were needed (in the first and only obvious place it was being used!), so the static array's size was reduced as part of a maintenance "fix". Because the resulting bug (reading/writing the 8th element) only corrupted a rarely used variable, it went unnoticed, but it later broke the robot being controlled by that program, costing roughly $15k in replacement hardware + labor and a month-long headache.
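A small, made-up illustration of the variable-reuse pattern: the first version is correct as written, but `tmp` carries two unrelated meanings, so reordering its statements (for example, computing `mean` after the second loop) silently changes the result. The second version gives each purpose its own name and scope. Both functions are hypothetical and assume a non-empty vector.

```cpp
#include <vector>

// One variable, two jobs: tmp is first a running sum, then a running maximum.
double mean_plus_max_reused(const std::vector<double>& v)
{
    double tmp, mean;
    tmp = 0.0;
    for (double x : v) tmp += x;
    mean = tmp / v.size();
    tmp = v[0];
    for (double x : v) if (x > tmp) tmp = x;
    return mean + tmp;
}

// One variable per purpose, each declared where its job starts.
double mean_plus_max_scoped(const std::vector<double>& v)
{
    double sum = 0.0;
    for (double x : v) sum += x;
    const double mean = sum / v.size();

    double max_value = v[0];
    for (double x : v) if (x > max_value) max_value = x;
    return mean + max_value;
}
```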

Also, code restructuring / refactoring can be made difficult and error-prone when unnecessarily wide-scoped (or multi-purpose) variables appear in functions or elsewhere. That's a pretty obvious one.

I think that sums up the main problems with that, which are each pretty significant pitfalls beyond the trivial "oops, I forgot to initialize that value" (which also occurs more often than you'd think). I'm sure I could come up with many more.

But a decent programmer shouldn't fall prey to uninitialized variables even without the warnings that any decent compiler will throw.

Decent programmers don't fall prey to these kinds of problems because they have good coding habits that save them from 99% of the pitfalls novice programmers often find themselves falling for. Being a good programmer is not about being "bug-proof", but about never forgetting that you're not.

deceptikon

And if you want to debate the issue, take it up with Herb Sutter, Andrei Alexandrescu and Bjarne Stroustrup.

This reeks of appeal to authority; if you have an argument to make, make it. I've already read anything you're likely to be thinking of from those authors, and it didn't change my mind.

I don't disagree that declaring variables reasonably close to the beginning of their logical lifetime is a good idea. I do disagree that variables should always be declared immediately before their first use, which is what it seems like you're advocating. The former, when used judiciously, can greatly enhance code clarity compared to always declaring variables at the top of the function (the other extreme, which, I'll mention again, I'm not advocating), but the latter can make code harder to read and understand by forcing you to switch between declaration and execution mindsets.

Let's take your replacement for the original code and reorganize a few declarations to make it read better (in my opinion). There are three general chunks of related code: the input of operands, the calculation, and output of the result:

It's obvious that a, b, c are related, so lumping them together makes more sense than interspersing them between the input statements. Technically they can be viewed as having a lifetime of the entire function, and therefore it makes sense to put them at the top.

The calculation is a separate logical unit, but still uses those three variables. disc, root1, and root2 only have a necessary lifetime of the latter half of the function, and their declarations can be deferred until that point (but they're still grouped, because the variables are directly related). However, given the simplicity of this function, I see no problem at all with even the extreme of declaring all variables at the top, even though I'd probably lean more toward what you see in the image, assuming only a reorganization of lines.
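The image with the reorganized code isn't available here, so the following is only one possible reading of the description above: a, b and c grouped at the top, and disc, root1 and root2 grouped where the calculation starts. As an assumption, the program body is wrapped in a hypothetical `run_quadratic` function that takes the streams as parameters, so it can be exercised without a console. Like the version it reorganizes, it still has no checks for a near-zero a or a negative discriminant.

```cpp
#include <cmath>
#include <iostream>
#include <sstream>
#include <string>

void run_quadratic(std::istream& in, std::ostream& out)
{
    // Input of operands: the three related inputs, declared together.
    float a = 0.0f, b = 0.0f, c = 0.0f;
    out << "enter a" << '\n';
    in >> a;
    out << "enter b" << '\n';
    in >> b;
    out << "enter c" << '\n';
    in >> c;

    // Calculation: the three related results, declared together
    // only once the calculation begins.
    float disc = (b * b) - (4.0f * a * c);
    float root1 = (-b + std::sqrt(disc)) / (2.0f * a);
    float root2 = (-b - std::sqrt(disc)) / (2.0f * a);

    // Output of the result.
    out << "root1 " << root1 << '\n';
    out << "root2 " << root2 << '\n';
}

// Hypothetical helper for trying the function on a fixed input string.
std::string run_quadratic_on(const std::string& input)
{
    std::istringstream in(input);
    std::ostringstream out;
    run_quadratic(in, out);
    return out.str();
}
```

In a real program, `main()` would simply call `run_quadratic(std::cin, std::cout);`.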

Yeah, that's exactly why I used the word "antiquated" and not the word "bad".

Lumping "antiquated" with "poor style" and "error-prone" very clearly puts it in the context of "bad".

functions can often become large and cumbersome and there isn't much that can be done about it

That's a different issue entirely. When you have a large amount of code that cannot be refactored, steps must be taken to organize it so that it's internally coherent. However, that doesn't mean the same steps are equally valuable when you have smaller functions or the option of refactoring.

I think that sums up the main problems with that

Pretty much all of your listed problems stem either from code that is poorly written in general or from niche areas that are clear exceptions to general good style in the first place. I'm thinking more of examples of otherwise good code, in reasonably sized functions, where having all of the variables at the top of the scope for their lifetime (not necessarily at the top of the function) suddenly makes the code error-prone.

If you'll humor me in showing off a little C code where I didn't have the option of interspersing declarations and executable statements, you'll be able to see what I mean by declaring variables at the top of the scope of their logical lifetime. I think this is a good style in general.

Would I change the code if given the ability to put a declaration anywhere? I might change line 268 to be a declaration, depending on my mood at the time. Or I might leave it the way it is to be consistent with line 330. But the point is that wouldn't change my assessment of the code's stylistic quality.

mike_2000_17

I think we largely agree on this topic. When I look at your example C code, I see a general effort to keep variables as locally scoped as possible, within reason. Its style is in complete agreement with the guideline, at least the way I see it, which I think is essentially the same way you see it. Code like the one you linked to (formatted I/O, parsing, etc.) does require things like tentative variables, flags, lots of switch-case style coding, etc. In this realm, the paradigm of "here are the variables I'm playing with, and here is the code" is a natural one, but even then, you still show evidence of trying to limit the scope, which just proves my point.

I don't know what you think I mean by declaring variables where they are first needed. That C code obeys that guideline, in my book. What I see are things like:

- Declare a few variables that will store the results of a loop.

- Run the loop (with locally declared variables that are needed only per-iteration).

- Inspect / return the result based on those variables.

So, those variables that appear at the start of the loops (which I presume is what you are pointing to, since all other variables in that code are declared directly on-site of where they are needed) are being declared and used at exactly the time and scope at which they are needed.
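The declare-results, run-the-loop, inspect-the-result pattern from the list above looks roughly like this in C++; `index_of_max` is a made-up example and assumes a non-empty vector.

```cpp
#include <cstddef>
#include <vector>

std::size_t index_of_max(const std::vector<int>& values)
{
    // 1. Variables that will hold the results of the loop.
    std::size_t best_index = 0;
    int best_value = values[0];

    // 2. The loop, with a variable needed only within a single iteration.
    for (std::size_t i = 1; i < values.size(); ++i) {
        const int current = values[i];
        if (current > best_value) {
            best_value = current;
            best_index = i;
        }
    }

    // 3. Inspect / return the result.
    return best_index;
}
```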

I'm not advocating for an obsessive compulsion to declare variables at the narrowest scope that is humanly possible, as you seem to imply that I do. As for lines 268 and 330, I consider that round-off error: it's not what I would have naturally done (out of habit) if I were the author of the code, but it's inconsequential. As I said earlier, good habits are largely inconsequential for trivial everyday coding, and that's a good example of that. My usual coding habits would probably have yielded something like having line 330 be a declaration-initialization line, having line 327 be a declaration-initialization just before the start of the loop (348), and lines 323-327 would also probably be moved a few lines down (closer to the loop). But when we're talking about good programming practice or good coding habits, it's hard to draw any real distinction between good and better with trivial little examples like those. I know what I would naturally produce as code, I know of good reasons for having developed those habits, and I've certainly reaped the benefits of them, but when discussing these examples (like the OP's code, too), it looks like we're just splitting hairs. As for the correction to the OP's code (declaring a, b, c at the top or just before each input), to me that's tomayto / tomahto; the more important part was the three calculated variables, which you left declared on-site, I wonder why...

A simple and very common example is a typical for-loop where you need the index at which the loop was broken. When writing a typical for-loop, I would, by default, declare the index/iterator in the for-statement, and if I then realize I need it after the loop (to know where it ended), I just move the declaration out to the line just before the for-statement. A try-block is another typical example like that. The point is, my default habit is to declare when first initialized, and then move the variables up the scopes as required by the logic of the code itself. That generally puts them on the line just before entering the scope, which pretty clearly conveys the message that these are variables needed or set by the scope you are about to enter, but whose values you want to retain coming out of the scope (e.g., results, flags, etc.).
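A sketch of that loop habit, with two hypothetical functions: in the first, the index stays inside the for-statement; in the second, its declaration has been moved up one line because the caller needs to know where the loop stopped.

```cpp
#include <cstddef>
#include <string>

// Default habit: the index lives only inside the for-statement.
int count_spaces(const std::string& s)
{
    int count = 0;
    for (std::size_t i = 0; i < s.size(); ++i) {
        if (s[i] == ' ') ++count;
    }
    return count;
}

// Needing the index after the loop: the declaration moves up one line,
// just before the for-statement, so its value survives the loop.
std::size_t first_digit_position(const std::string& s)
{
    std::size_t i = 0;
    for (; i < s.size(); ++i) {
        if (s[i] >= '0' && s[i] <= '9') break;
    }
    return i;  // equals s.size() when there is no digit
}
```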

I doubt that many programmers work with the opposite logic, i.e., declare variables at the start of the function in one massive list, and then possibly break up that list into smaller lists at the beginning of sub-scopes when the function-wide list becomes too bloated. That just doesn't make any sense to me. The "declare when initialized and move up if needed" logic is much more sound because it is driven by the requirements of the code itself, and it also has the beneficial effect of making you realize what you are doing (by having to move up a declaration) and possibly how you could improve the code or move it to a separate function. The "create a big list of variables at the top and worry about contextual interference later" strategy has no such merit: it becomes highly dependent on the author's own threshold or preferences for contextual clarity and code bloat, and very often what you see is that, to the original author, the code was very clear at the time (because he was in the midst of writing it!), but the result is gibberish. The "declare local and move up" strategy is one of the easiest things you can do to shield yourself (in part) against that problem.

deceptikon

I'm not advocating for an obsessive compulsion to declare variables at the narrowest scope that is humanly possible, as you seem to imply that I do.

That's what I inferred from your example, which is what I believe to be excessive localization of declarations:

cout << "enter a" << endl;
float a = 0.0;
cin >> a;
cout << "enter b" << endl;
float b = 0.0;
cin >> b;
cout << "enter c" << endl;
float c = 0.0;
cin >> c;

The reason I brought it up is that you were offering it as a superior example to a beginner, who might take that kind of thing to heart and go overboard with it. So I felt a tempering perspective was warranted.

asifalizaman

Yes, a, b and c can be of type float because they may contain fractional values like 2.1, 0.5, 0.7 and so on. You guys are right; thanks for sharing good information with the others!
