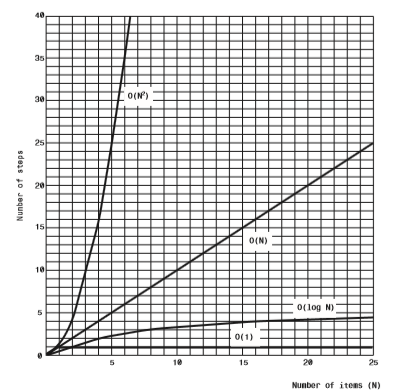

I'm doing some revision for a university exam at the end of the week. I think I've got my head around most of the Big O material, apart from working out the Big O of equations like the ones below.

T(n) = 1000040

T(n) = 45n^2 + 3n/1000 + 18(log n)
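To show what I mean, here is a rough sketch that evaluates each term of the second equation separately for growing n, to see which one ends up mattering. The language (Python) and the names `terms` and `quadratic_share` are just my own choices for illustration, and I'm assuming log means base 2 since the base isn't given.

```python
import math


def terms(n):
    """Return the three terms of T(n) = 45n^2 + 3n/1000 + 18(log n) separately."""
    quadratic = 45 * n ** 2
    linear = 3 * n / 1000
    # Assumption: log base 2. A different base only changes this term by a constant factor.
    logarithmic = 18 * math.log2(n)
    return quadratic, linear, logarithmic


def quadratic_share(n):
    """Fraction of T(n) that comes from the 45n^2 term (hypothetical helper)."""
    quadratic, linear, logarithmic = terms(n)
    return quadratic / (quadratic + linear + logarithmic)


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        quadratic, linear, logarithmic = terms(n)
        print(
            f"n={n:>7}  45n^2={quadratic:.3e}  3n/1000={linear:.3e}  "
            f"18 log n={logarithmic:.3e}  share of 45n^2={quadratic_share(n):.6f}"
        )
```

Running this, the share contributed by the 45n^2 term moves towards 1 as n grows. The first equation has no n in it at all, so its value is the same for every n and there is nothing to tabulate.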

Could anyone explain the logic behind how one would come to an answer for these?