shader.vert:

    #version 120

    varying vec3 position;
    varying vec3 normal;

    void main()
    {
        position = (vec3(gl_ModelViewMatrix*gl_Vertex)); //get the position of the vertex after translation, rotation, scaling
        normal = gl_NormalMatrix*gl_Normal; //get the normal direction, after translation, rotation, scaling
        gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
    }

shader.frag:

    #version 120

    varying vec3 position;
    varying vec3 normal;

    uniform vec3 lightColor;
    uniform vec3 surfaceColor;
    uniform float ambientScale;

    void main()
    {
        vec3 lightAmbient = lightColor * ambientScale;
        vec3 surfaceAmbient = surfaceColor;
        vec3 ambient = lightAmbient * surfaceAmbient;

        //vec3 lightDirection = normalize(position); // ***********
        //float n_dot_1 = max(0.0, dot(normalize(normal), position));

        vec3 lightDiffuse = lightColor;
        vec3 surfaceDiffuse = surfaceColor;

        //vec3 diffuse = (surfaceDiffuse * lightDiffuse) * n_dot_1;
        //gl_FragColor=vec4(ambient + diffuse, 1.0);
        gl_FragColor=vec4(ambient, 1.0);
    }

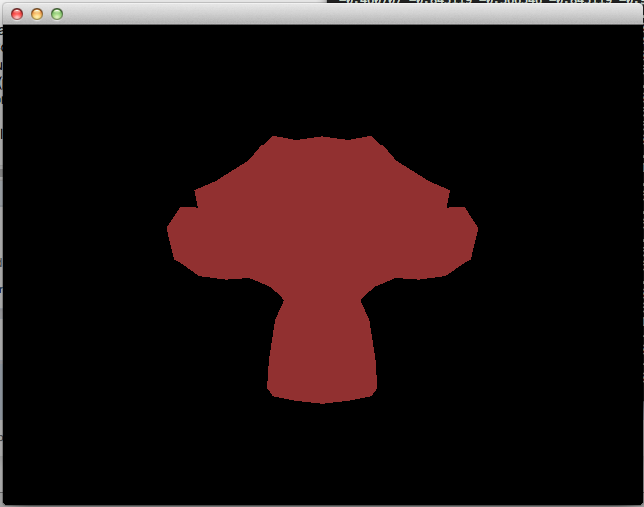

*********** When this line is uncommented everything runs fine and the fragment colour is correct:

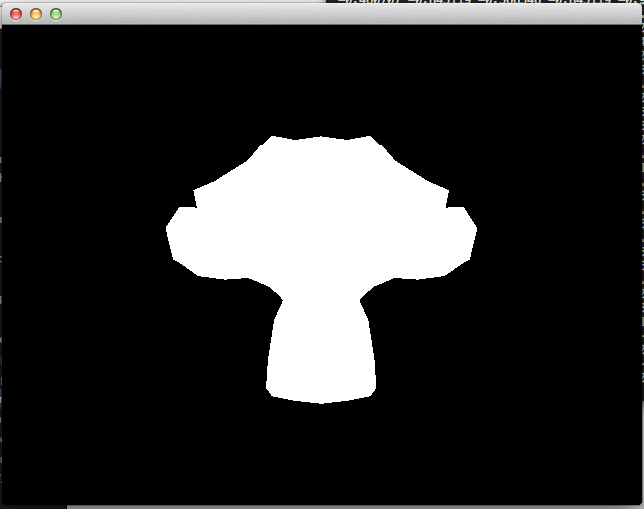

However, if that line is uncommented then this is what happens;

Why is this? Is it because the way I pass my normals VBO is wrong? Is it because of the way I've written the shader itself? It's almost as if the varying variable is causing the fragment shader to crash and each fragment defaults to (1.0, 1.0, 1.0, 1.0). The following code is my implementation of the VBOs.

Mesh.h:

    #ifndef MESH_H
    #define MESH_H

    #include <string>
    #include <iostream>
    #include <fstream>
    #include <sstream>
    #include <vector>
    #include <cml/cml.h>
    #include <GL/glfw.h>

    typedef cml::vector3f vec3f;

    class Mesh
    {
        std::vector<vec3f> vertexList;
        std::vector<vec3f> normalList;
        std::vector<GLuint> indexList;
        std::vector<GLuint> normalIndexList;
        GLuint vbo[3];

    public:
        Mesh(const std::string& fileName);
        void init();
        void display();
    };

    #endif

Mesh.cpp:

    #include "Mesh.h"

    Mesh::Mesh(const std::string& fileName)
    {
        std::string s;
        // std::ifstream file(fileName);
        std::ifstream file("untitled.obj");
        std::string line;
        while( std::getline(file, line)) {
            std::istringstream iss(line);
            std::string result;
            if (std::getline( iss, result , ' ')) {
                if (result == "v") {
                    float f;
                    vertexList.push_back(vec3f(0, 0, 0));
                    for (int i = 0; i < 3; i++) {
                        iss >> f;
                        vertexList.back()[i] = f;
                    }
                } else if (result == "vn") {
                    float f;
                    normalList.push_back(vec3f(0, 0, 0));
                    for (int i = 0; i < 3; i++) {
                        iss >> f;
                        normalList.back()[i] = f;
                    }
                } else if (result == "f") {
                    while (std::getline(iss, s, ' ')) {
                        std::istringstream indexBlock(s);
                        for (int i = 0; i < 3; i++) {
                            std::string intString;
                            if (std::getline(indexBlock, intString, '/')) {
                                std::istringstream sstream(intString);
                                int index = -1;
                                sstream >> index;
                                if (!(index == -1)) {
                                    if (i == 0) {
                                        indexList.push_back(index - 1);
                                    } else if (i == 1) {
                                    } else if (i == 2) {
                                        normalIndexList.push_back(index - 1);
                                    }
                                }
                            }
                        }
                    }
                }
            }
        }
        std::cout << "Loaded " << fileName << std::endl;
    }

    void Mesh::init()
    {
        GLfloat tmp_normals[normalList.size()][3];
        unsigned int index = 0;
        for (int c = 0; c < indexList.size(); c++) {
            tmp_normals[indexList.at(c)][0] = normalList.at(normalIndexList.at(c))[0];
            tmp_normals[indexList.at(c)][1] = normalList.at(normalIndexList.at(c))[1];
            tmp_normals[indexList.at(c)][2] = normalList.at(normalIndexList.at(c))[2];
            std::cout << normalList.at(normalIndexList.at(c))[0] << " ";
        }

        glGenBuffers(3, vbo);

        glBindBuffer(GL_ARRAY_BUFFER, vbo[0]); // vertices
        glBufferData(GL_ARRAY_BUFFER, sizeof(float) * 3 * vertexList.size(), (const GLvoid*)& vertexList.front(), GL_STATIC_DRAW);

        glBindBuffer(GL_ARRAY_BUFFER, vbo[1]); // normals
        glBufferData(GL_ARRAY_BUFFER, sizeof(float) * 3 * normalList.size(), (const GLvoid*)& tmp_normals[0], GL_STATIC_DRAW);

        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, vbo[2]); // indices
        glBufferData(GL_ELEMENT_ARRAY_BUFFER, indexList.size() * sizeof(GLuint), (const GLvoid*)& indexList.front(), GL_STATIC_DRAW);
    }

    void Mesh::display()
    {
        glEnableClientState(GL_VERTEX_ARRAY);
        glEnableClientState(GL_NORMAL_ARRAY);

        glBindBuffer(GL_ARRAY_BUFFER, vbo[0]); // vertices
        glVertexPointer(3, GL_FLOAT, 0, 0);

        glBindBuffer(GL_ARRAY_BUFFER, vbo[1]); // normals
        glNormalPointer(GL_FLOAT, 0, 0);

        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, vbo[2]); // indices
        glDrawElements(GL_TRIANGLES, indexList.size(), GL_UNSIGNED_INT, 0);

        glDisableClientState(GL_VERTEX_ARRAY);
        glDisableClientState(GL_NORMAL_ARRAY);
    }
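
To make the normal handling easier to check in isolation, here is a minimal sketch of the re-indexing step that `init()` is meant to perform, with the OpenGL calls left out. It is not the code I am running: `buildPerVertexNormals` and `Vec3` are hypothetical names, `std::array<float, 3>` stands in for `cml::vector3f`, and a `std::vector` replaces the variable-length array. The sketch assumes the output should hold one normal per vertex (so it is sized by the vertex count, unlike `tmp_normals` above), and uses `.at()` so an out-of-range index throws rather than writing past the end.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Vec3 = std::array<float, 3>; // stand-in for cml::vector3f (assumption)

// Hypothetical helper, not part of the Mesh class above: builds one normal per
// vertex so the normal array can be drawn with the same index buffer as the
// vertex array.
std::vector<Vec3> buildPerVertexNormals(std::size_t vertexCount,
                                        const std::vector<unsigned>& indexList,
                                        const std::vector<unsigned>& normalIndexList,
                                        const std::vector<Vec3>& normalList)
{
    // The face parser is expected to emit one normal index per vertex index.
    assert(indexList.size() == normalIndexList.size());

    // Indexed by vertex index, so it is sized by the number of vertices.
    std::vector<Vec3> perVertex(vertexCount, Vec3{0.0f, 0.0f, 0.0f});

    for (std::size_t c = 0; c < indexList.size(); ++c) {
        // .at() throws std::out_of_range instead of corrupting memory.
        perVertex.at(indexList[c]) = normalList.at(normalIndexList[c]);
    }
    return perVertex;
}
```

If a vertex is referenced by several faces with different normals, the last face processed wins in this sketch. The result would be uploaded with a size of `sizeof(float) * 3 * perVertex.size()`.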

Thank you.