The new robots.txt tester built into Google Search Console reports errors on the last two lines of my robots.txt file:

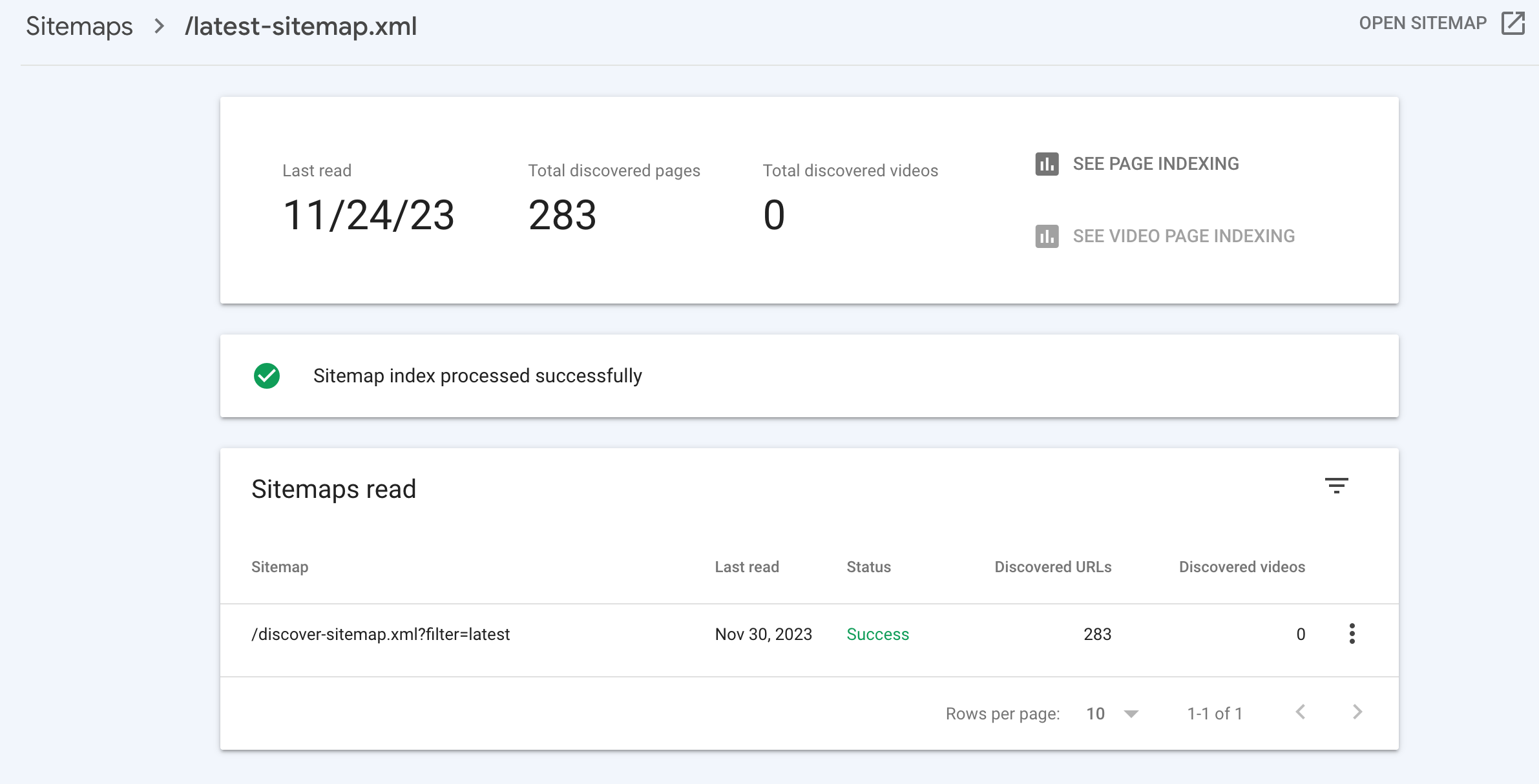

Sitemap: https://www.daniweb.com/latest-sitemap.xml

Sitemap: https://www.daniweb.com/sitemap.xml

The error is: Invalid sitemap URL detected; syntax not understood

Any idea what could be wrong here?