On March 4, 2024, Anthropic launched the Claude 3 family of large language models. Anthropic claimed that its Claude 3 Opus model outperforms GPT-4 on various benchmarks.

Intrigued by Anthropic's claim, I ran a simple test to compare the performance of Claude 3 Opus, Google Gemini Pro, and OpenAI's GPT-4 on zero-shot text classification. This article explains the experiment and the results obtained, along with my personal observations.

Note: I have already compared the performance of Google Gemini Pro and ChatGPT on another dataset in one of my previous articles. This article adds Claude 3 Opus to the list of compared models. In addition, the tests are performed on a significantly more difficult dataset.

So, let's begin without further ado.

Importing and Installing Required Libraries

The following script installs the Python client libraries needed to call the Claude 3 Opus, Google Gemini Pro, and OpenAI GPT-4 models.

!pip install anthropic

!pip install --upgrade google-cloud-aiplatform

!pip install openai

The script below imports the required libraries.

import os

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import anthropic

from openai import OpenAI

import vertexai

from vertexai.preview.generative_models import GenerativeModel, Part

Importing and Preprocessing the Dataset

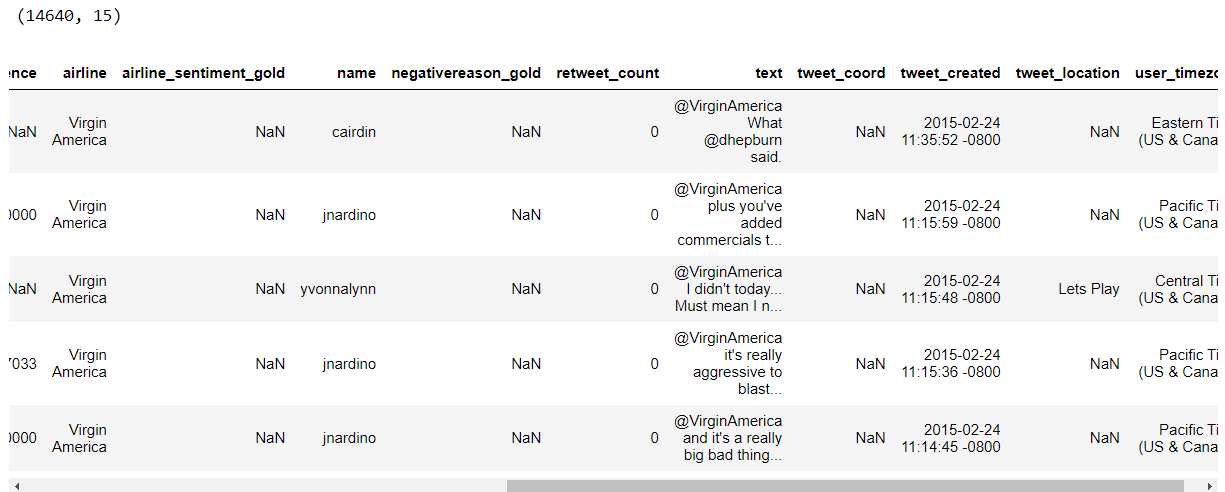

We will use LLMs to make zero-shot predictions on the US Airline Sentiment dataset, which you can download from Kaggle.

The dataset consists of tweets regarding various US airlines. The tweets are manually annotated for positive, negative, or neutral sentiments. The text column contains the tweet texts, while the airline_sentiment column contains sentiment labels.

The following script imports the dataset, prints the dataset shape, and displays the dataset's first five rows.

## Dataset download link

## https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment?select=Tweets.csv

dataset = pd.read_csv(r"D:\Datasets\tweets.csv")

print(dataset.shape)

dataset.head()

Output:

The dataset originally consists of 14,640 records. For this comparison, we will randomly select 100 records, split as evenly as possible across the three sentiment categories, i.e., 34 neutral tweets and 33 each for positive and negative sentiment. The following script selects the 100 random tweets.

# Remove rows where 'airline_sentiment' or 'text' are NaN

dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

# Remove rows where 'airline_sentiment' or 'text' are empty strings

dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

# Filter the DataFrame for each sentiment

neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']

positive_df = dataset[dataset['airline_sentiment'] == 'positive']

negative_df = dataset[dataset['airline_sentiment'] == 'negative']

# Randomly sample records from each sentiment

neutral_sample = neutral_df.sample(n=34)

positive_sample = positive_df.sample(n=33)

negative_sample = negative_df.sample(n=33)

# Concatenate the samples into one DataFrame

dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

# Reset index if needed

dataset.reset_index(drop=True, inplace=True)

# print value counts

print(dataset["airline_sentiment"].value_counts())

Output:

airline_sentiment

neutral 34

positive 33

negative 33

Name: count, dtype: int64

We are now ready to perform zero-shot classification with various large language models.
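The sampling step above draws a different set of 100 tweets on every run, so the accuracy figures reported later depend on which tweets happened to be picked. The sketch below shows one way to make such a draw repeatable with a fixed seed. It uses plain Python with toy records rather than the real DataFrame, and the `stratified_sample` helper and the seed value are illustrative assumptions, not part of the original experiment. With pandas, passing `random_state` to `sample()` has the same effect.

```python
import random
from collections import defaultdict

def stratified_sample(records, label_key, counts, seed=42):
    """Pick counts[label] random records per label, reproducibly."""
    rng = random.Random(seed)  # fixed seed -> same sample on every run
    by_label = defaultdict(list)
    for record in records:
        by_label[record[label_key]].append(record)
    sample = []
    for label, n in counts.items():
        sample.extend(rng.sample(by_label[label], n))
    return sample

# Toy records standing in for the rows of Tweets.csv (hypothetical data).
records = [
    {"text": f"tweet {i}", "airline_sentiment": label}
    for i, label in enumerate(["neutral", "positive", "negative"] * 50)
]
counts = {"neutral": 34, "positive": 33, "negative": 33}
subset = stratified_sample(records, "airline_sentiment", counts)
print(len(subset))
```

Calling the helper twice with the same seed returns the same 100 records, which makes a later rerun of the comparison easier to interpret.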

Zero Shot Text Classification with Google Gemini Pro

To access the Google Gemini Pro model via the Google Cloud API, you need to create a service account for your Vertex AI project in Google Cloud and download its JSON credentials file. Next, create an environment variable named GOOGLE_APPLICATION_CREDENTIALS and set its value to the path of the JSON file you just downloaded.

os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = "PATH_TO_VERTEX_AI_SERVICE_ACCOUNT JSON FILE"

The rest of the process is straightforward. You must create an object of the GenerativeModel class and pass the input query to the model.generate_content() method.

In the following script, we define the find_sentiment_gemini() function, which accepts a tweet and returns its sentiment. Pay attention to the content variable. It contains the prompt we will pass to our Google Gemini Pro model. The prompt will also remain the same for the rest of the models.

model = GenerativeModel("gemini-pro")

config = {
    "max_output_tokens": 10,
    "temperature": 0.0,
}

def find_sentiment_gemini(tweet):
    content = """What is the sentiment expressed in the following tweet about an airline?
Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
tweet: {}""".format(tweet)

    responses = model.generate_content(
        content,
        generation_config=config,
        stream=True,
    )

    # With stream=True the reply arrives in chunks; return the text of the first chunk
    for response in responses:
        return response.text
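Since the same prompt is reused for all three models, it can help to see exactly what text a model receives for a given tweet. The snippet below is a small sketch of that step on its own. The `PROMPT_TEMPLATE` constant, the `build_prompt` helper, and the sample tweet are illustrative names and data, not part of the original scripts.

```python
PROMPT_TEMPLATE = """What is the sentiment expressed in the following tweet about an airline?
Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
tweet: {}"""

def build_prompt(tweet):
    """Fill the shared template with one tweet."""
    return PROMPT_TEMPLATE.format(tweet)

# Hypothetical tweet used only to show the rendered prompt.
prompt = build_prompt("@united thanks for the quick rebooking!")
print(prompt)
```

Keeping the template in one place is one way to guarantee that every model really gets an identical prompt.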

Finally, we iterate through all the tweets from the text column in our dataset and pass each tweet to the find_sentiment_gemini() function. The responses are saved in the all_sentiments list. We time the script to see how long it takes to execute.

%%time

all_sentiments = []
tweets_list = dataset["text"].tolist()

i = 0
exceptions = 0

while i < len(tweets_list):
    try:
        tweet = tweets_list[i]
        sentiment_value = find_sentiment_gemini(tweet)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # On failure, count the exception and retry the same tweet
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)

Output:

Total exception count: 0

CPU times: total: 312 ms

Wall time: 54.5 s

The above output shows that the script took 54.5 seconds to run.
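The loop above retries a failing tweet until the call succeeds, which works when errors are temporary but never stops if an error is permanent. A bounded variant could look like the sketch below. The `classify_with_retry` helper, its parameters, and the `flaky_classifier` stand-in are assumptions made for illustration, and no real API is called.

```python
import time

def classify_with_retry(classify, tweet, max_attempts=3, base_delay=1.0):
    """Call classify(tweet), retrying on failure with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return classify(tweet)
        except Exception as error:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            print(f"Attempt {attempt} failed: {error}")
            time.sleep(base_delay * 2 ** (attempt - 1))

# Hypothetical stand-in for an API call that fails twice, then succeeds.
calls = {"count": 0}

def flaky_classifier(tweet):
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary error")
    return "negative"

result = classify_with_retry(flaky_classifier, "Flight delayed again", base_delay=0)
print(result, calls["count"])
```

In the experiment, any of the find_sentiment functions could be passed in place of the stand-in classifier.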

Finally, you can calculate model accuracy by comparing the values in the airline_sentiment column of the dataset with the all_sentiments list.

accuracy = accuracy_score(all_sentiments, dataset["airline_sentiment"])

print("Accuracy:", accuracy)

Output:

Accuracy: 0.78

You can see that the model achieves 78% accuracy.
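The accuracy score counts a prediction as correct only when it matches the label string exactly, so a reply such as "Positive" or "neutral." would be scored as wrong. The sketch below shows one possible way to tidy replies before scoring. The `normalize_label` and `accuracy` helpers and the sample replies are made up for illustration, and the outputs above do not tell us whether any reply in this experiment actually needed such cleaning.

```python
VALID_LABELS = {"positive", "negative", "neutral"}

def normalize_label(raw):
    """Trim whitespace and surrounding punctuation, lowercase; None if not a valid label."""
    cleaned = raw.strip().strip(".!\"'").lower()
    return cleaned if cleaned in VALID_LABELS else None

def accuracy(predictions, labels):
    """Fraction of normalized predictions that equal the true label."""
    matches = sum(normalize_label(p) == y for p, y in zip(predictions, labels))
    return matches / len(labels)

# Hypothetical raw replies and true labels.
raw_predictions = ["Positive", " negative\n", "neutral.", "mixed"]
true_labels = ["positive", "negative", "neutral", "negative"]
print(accuracy(raw_predictions, true_labels))  # 3 of 4 match -> 0.75
```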

Zero Shot Text Classification with GPT-4

Let's now perform zero-shot classification with GPT-4. The process remains the same. We will first create an OpenAI client using the OpenAI API key.

Next, we define the find_sentiment_gpt() function, which internally calls the client.chat.completions.create() method to generate a response for the input tweet.

client = OpenAI(
    # This is the default and can be omitted
    api_key=os.environ.get('OPENAI_API_KEY'),
)

def find_sentiment_gpt(tweet):
    content = """What is the sentiment expressed in the following tweet about an airline?
Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
tweet: {}""".format(tweet)

    sentiment = client.chat.completions.create(
        model="gpt-4",
        temperature=0,
        max_tokens=10,
        messages=[
            {"role": "user", "content": content}
        ]
    )

    return sentiment.choices[0].message.content

Next, we iterate through all the tweets and pass each tweet to the find_sentiment_gpt() function. The responses are stored in the all_sentiments list.

%%time

all_sentiments = []
tweets_list = dataset["text"].tolist()

i = 0
exceptions = 0

while i < len(tweets_list):
    try:
        tweet = tweets_list[i]
        sentiment_value = find_sentiment_gpt(tweet)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # On failure, count the exception and retry the same tweet
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)

Output:

Total exception count: 0

CPU times: total: 250 ms

Wall time: 49.4 s

The GPT-4 model took 49.4 seconds to process 100 tweets.

The following script prints the model's accuracy.

accuracy = accuracy_score(all_sentiments, dataset["airline_sentiment"])

print("Accuracy:", accuracy)

Output:

Accuracy: 0.79

The output shows that GPT-4 achieves a slightly better accuracy (79%) than Google Gemini Pro (78%).
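With only 100 tweets, a one-point difference corresponds to a single tweet. One rough way to gauge how much weight such a gap can carry is a normal-approximation confidence interval around each accuracy. The sketch below applies that textbook formula to the two scores reported so far. The `accuracy_interval` helper is an illustrative addition, not part of the original experiment.

```python
import math

def accuracy_interval(accuracy, n, z=1.96):
    """Approximate 95% confidence interval for an accuracy measured on n samples."""
    margin = z * math.sqrt(accuracy * (1 - accuracy) / n)
    return accuracy - margin, accuracy + margin

# Accuracies reported above, each measured on 100 tweets.
for name, acc in [("Gemini Pro", 0.78), ("GPT-4", 0.79)]:
    low, high = accuracy_interval(acc, 100)
    print(f"{name}: {acc:.2f} (roughly {low:.2f} to {high:.2f})")
```

At this sample size the margin works out to about eight percentage points on either side, so the two intervals overlap almost entirely.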

Zero Shot Text Classification with Claude 3 Opus

Finally, let's try the so-called best, Claude 3 Opus. To generate text using Claude 3, you need to create a client object of the anthropic.Anthropic class and pass it your Anthropic API key, which you can obtain by signing up for the Anthropic Console.

You can call the messages.create() method from the Anthropic client to generate a response.

The following script defines the find_sentiment_claude() function that returns the sentiment of a tweet using the Claude 3 Opus model.

client = anthropic.Anthropic(
    # defaults to os.environ.get("ANTHROPIC_API_KEY")
    api_key=os.environ.get('ANTHROPIC_API_KEY')
)

def find_sentiment_claude(tweet):
    content = """What is the sentiment expressed in the following tweet about an airline?
Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
tweet: {}""".format(tweet)

    sentiment = client.messages.create(
        model="claude-3-opus-20240229",
        max_tokens=1000,
        temperature=0.0,
        messages=[
            {"role": "user", "content": content}
        ]
    )

    return sentiment.content[0].text

We can pass all the tweets to the find_sentiment_claude() function and store the corresponding responses in the all_sentiments list. Finally, we can compare the predicted sentiments with the actual sentiment labels to calculate the model's accuracy.

%%time

all_sentiments = []
tweets_list = dataset["text"].tolist()

i = 0
exceptions = 0

while i < len(tweets_list):
    try:
        tweet = tweets_list[i]
        sentiment_value = find_sentiment_claude(tweet)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # On failure, count the exception and retry the same tweet
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)

accuracy = accuracy_score(all_sentiments, dataset["airline_sentiment"])
print("Accuracy:", accuracy)

Output:

Total exception count: 0

Accuracy: 0.71

CPU times: total: 141 ms

Wall time: 3min 8s

The above output shows that Claude 3 Opus took 3 minutes and 8 seconds to process 100 tweets and achieved an accuracy of only 71%, substantially lower than GPT-4 (79%) and Gemini Pro (78%). Given Anthropic's big claims, I was not impressed.
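To put the three timings on the same footing, the wall-clock totals can be converted into an average time per tweet. The short calculation below uses only the numbers reported in the outputs above. The variable names are illustrative.

```python
# Wall-clock times reported above, in seconds, for 100 tweets each.
wall_times = {"Gemini Pro": 54.5, "GPT-4": 49.4, "Claude 3 Opus": 3 * 60 + 8}
num_tweets = 100

# Average seconds spent per tweet for each model.
per_tweet = {name: total / num_tweets for name, total in wall_times.items()}
fastest = min(per_tweet.values())

for name, seconds in per_tweet.items():
    print(f"{name}: {seconds:.2f} s per tweet ({seconds / fastest:.1f}x the fastest)")
```

That is roughly half a second per tweet for Gemini Pro and GPT-4, against close to two seconds for Claude 3 Opus. These are single, sequential runs, so network conditions at the time are part of each figure.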

Conclusion

The results from the experiment in this article show that, despite Anthropic's claims, the performance of Claude 3 Opus on a simple task such as zero-shot text classification was not up to the mark. I would still use GPT-4 or Gemini Pro for zero-shot text classification tasks.