There is this function, which several people seem to use to scrape content off a website:

```php
function disguise_curl($url)
{
    $curl = curl_init();

    // Set up headers: the same headers sent by Firefox version 2.0.0.6.
    // The Accept line is split in two because php.net said it was too long. :/
    $header = [];
    $header[0]  = "Accept: text/xml,application/xml,application/xhtml+xml,";
    $header[0] .= "text/html;q=0.9,text/plain;q=0.8,image/png,*/*;q=0.5";
    $header[] = "Cache-Control: max-age=0";
    $header[] = "Connection: keep-alive";
    $header[] = "Keep-Alive: 300";
    $header[] = "Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.7";
    $header[] = "Accept-Language: en-us,en;q=0.5";
    $header[] = "Pragma: "; // empty value: cURL drops the header instead of sending it

    curl_setopt($curl, CURLOPT_URL, $url);
    // Claim to be Firefox 3.6.3 on Windows XP
    curl_setopt($curl, CURLOPT_USERAGENT, 'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3');
    curl_setopt($curl, CURLOPT_HTTPHEADER, $header);
    // Claim the visitor arrived from Google
    curl_setopt($curl, CURLOPT_REFERER, 'http://www.google.com');
    curl_setopt($curl, CURLOPT_ENCODING, 'gzip,deflate');
    curl_setopt($curl, CURLOPT_AUTOREFERER, true);
    curl_setopt($curl, CURLOPT_RETURNTRANSFER, true); // return the body instead of printing it
    curl_setopt($curl, CURLOPT_TIMEOUT, 10);          // give up after 10 seconds

    $html = curl_exec($curl); // execute the request

    if ($html === false) {
        // Report the error from the same handle ($curl, not the undefined $ch)
        echo "cURL error number: " . curl_errno($curl);
        echo "cURL error: " . curl_error($curl);
        curl_close($curl);
        exit;
    }

    curl_close($curl); // close the connection
    return $html;      // and finally, return the page body
}
```

(To state the obvious, you would call it as `echo disguise_curl($url);`.)

Is there any way to detect when someone does this to my site, and then either block them or show them a page with a specific message?
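One possible heuristic, sketched here under the assumption that scrapers copy the function verbatim: it sends a few exact header values that are easy to fingerprint on the server, namely the hard-coded Firefox 3.6.3 user agent, a bare `http://www.google.com` referer, `Keep-Alive: 300` and a fixed `Accept-Charset` string. The function name `looks_like_disguise_curl()` and the two-signal threshold are my own choices, not anything standard. Every header is set by the client, so anyone who edits one string in the function slips past this check.

```php
<?php
/**
 * Hypothetical heuristic: returns true when a request carries at least two
 * of the exact header values hard-coded in disguise_curl().
 * $headers maps header names to values, e.g. the result of getallheaders().
 */
function looks_like_disguise_curl(array $headers): bool
{
    // Header names are case-insensitive, so normalise them first.
    $h = array_change_key_case($headers, CASE_LOWER);

    $signals = 0;

    // The exact Firefox 3.6.3 user agent string from the function.
    if (($h['user-agent'] ?? '') === 'Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3') {
        $signals++;
    }
    // A bare Google referer with no path or search query.
    if (($h['referer'] ?? '') === 'http://www.google.com') {
        $signals++;
    }
    // The explicit "Keep-Alive: 300" request header.
    if (($h['keep-alive'] ?? '') === '300') {
        $signals++;
    }
    // The exact Accept-Charset value from the function.
    if (($h['accept-charset'] ?? '') === 'ISO-8859-1,utf-8;q=0.7,*;q=0.7') {
        $signals++;
    }

    // Require two independent signals to reduce false positives.
    return $signals >= 2;
}
```

On the server you could pass it `getallheaders()` (where your SAPI provides it) and respond with a 403 or your own message page when it returns `true`.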

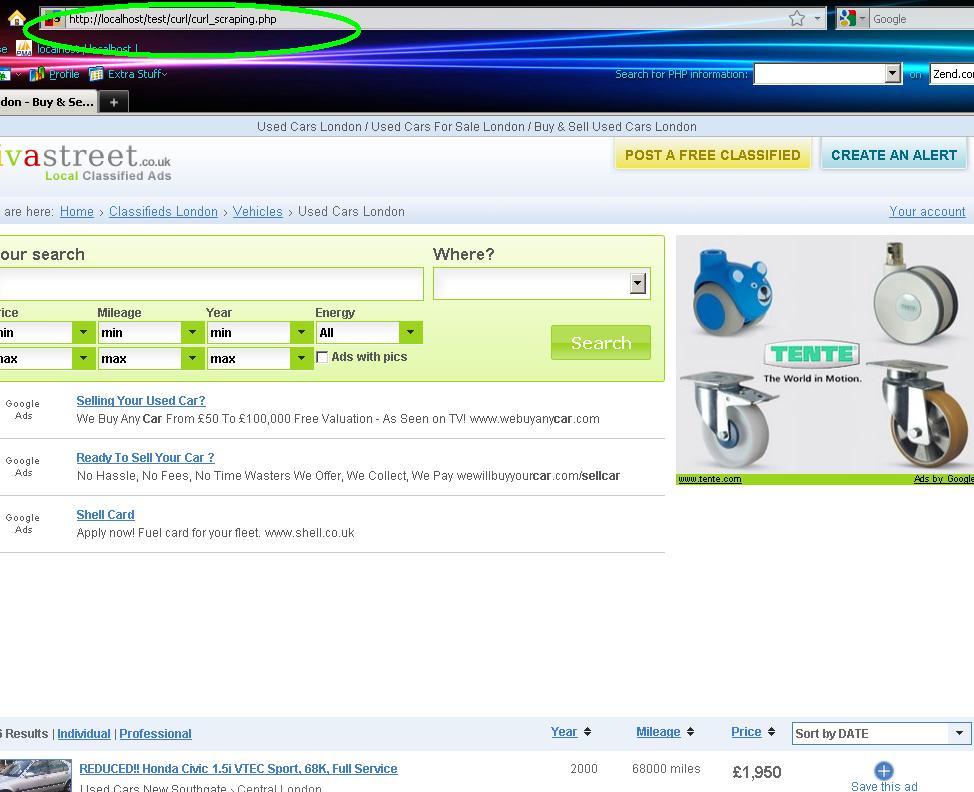

I've tried the function against a few sites to see whether any of them block it, and found that http://london.vivastreet.co.uk does. I haven't been able to figure out how, but maybe someone here can.
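I don't know what london.vivastreet.co.uk actually does, but one common technique that would stop this exact function is a cookie round-trip: on the first request the server sets a cookie and redirects back to the same URL, and a client that returns without the cookie is refused. `disguise_curl()` sets neither `CURLOPT_FOLLOWLOCATION` nor a cookie jar, so it would only ever get the redirect response back, never the page. The cookie name, the `cookie_check` query parameter and both function names below are hypothetical; this is a minimal sketch, not a hardened implementation.

```php
<?php
// Hypothetical names chosen for this sketch.
const CHECK_COOKIE = 'visitor_ok';
const CHECK_PARAM  = 'cookie_check';

/**
 * Decide how to treat a request from its cookies and query string alone.
 * Returns 'serve', 'challenge' (set cookie + redirect) or 'block'.
 */
function cookie_check_action(array $cookies, array $query): string
{
    if (isset($cookies[CHECK_COOKIE])) {
        return 'serve';     // the client stored our cookie and sent it back
    }
    if (isset($query[CHECK_PARAM])) {
        return 'block';     // already redirected once, cookie still missing
    }
    return 'challenge';     // first visit: hand out the cookie and redirect
}

/**
 * Call at the very top of a page, before any output is sent.
 */
function apply_cookie_check(): void
{
    $action = cookie_check_action($_COOKIE, $_GET);
    if ($action === 'serve') {
        return;
    }
    if ($action === 'challenge') {
        setcookie(CHECK_COOKIE, '1', 0, '/');
        // Redirect to the same URL with the marker parameter appended.
        $uri = $_SERVER['REQUEST_URI'];
        $sep = (strpos($uri, '?') === false) ? '?' : '&';
        header('Location: ' . $uri . $sep . CHECK_PARAM . '=1', true, 302);
        exit;
    }
    // 'block': this client does not keep cookies.
    http_response_code(403);
    echo 'Please enable cookies to view this site.';
    exit;
}
```

Call `apply_cookie_check()` before any output on each protected page. The trade-off is that it also turns away real visitors who disable cookies, and possibly search-engine crawlers.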

A second question: why would someone write a complicated function like that when `file_get_contents($url)` fetches the same page? Is it to avoid suspicion?
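For comparison, `file_get_contents()` can also send browser-like headers through a stream context, so cURL is not strictly required for the disguise. By default (with PHP's `user_agent` ini setting left empty) it sends no `User-Agent` header at all, which makes its requests easy to spot; that is one reason someone might prefer a version that imitates a browser. A minimal sketch, reusing header values from the question; `browser_http_options()` and `disguise_fgc()` are hypothetical names.

```php
<?php
/**
 * Stream-context options that make file_get_contents() send browser-like
 * headers, similar to disguise_curl(). Values are copied from the question.
 */
function browser_http_options(): array
{
    $headers = [
        'User-Agent: Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3',
        'Referer: http://www.google.com',
        'Accept: text/xml,application/xml,application/xhtml+xml,text/html;q=0.9,text/plain;q=0.8,image/png,*/*;q=0.5',
        'Accept-Language: en-us,en;q=0.5',
        // No "Accept-Encoding: gzip" here: unlike CURLOPT_ENCODING, the
        // http:// stream wrapper does not decompress the response for you.
    ];

    return [
        'http' => [
            'method'  => 'GET',
            'header'  => implode("\r\n", $headers),
            'timeout' => 10, // read timeout in seconds
        ],
    ];
}

/**
 * Fetch $url with the headers above; returns the body, or false on failure.
 */
function disguise_fgc(string $url)
{
    $context = stream_context_create(browser_http_options());
    return file_get_contents($url, false, $context);
}
```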

Thank you very much for your time.