In my previous article, I explained how I developed a simple chatbot using LangChain and ChatGPT that can answer queries related to Paris Olympics ticket prices.

However, one major drawback of that chatbot is that it generates each response from the current query alone, so it cannot answer follow-up questions. In short, the chatbot has no memory in which to store previous conversations, so it cannot use information from earlier exchanges when answering.

In this article, I will explain how to add memory to this chatbot and execute conversations where the chatbot can respond to queries considering the past conversation.

So, let's begin without further ado.

Installing and Importing Required Libraries

The following script installs the required libraries for this article.

!pip install -U langchain

!pip install langchain-openai

!pip install pypdf

!pip install faiss-cpu

!pip install langchain-community

The script below imports required libraries.

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_openai import OpenAIEmbeddings

from langchain_community.vectorstores import FAISS

from langchain.chains.combine_documents import create_stuff_documents_chain

from langchain.chains import create_retrieval_chain

from langchain_core.documents import Document

from langchain.chains import create_history_aware_retriever

from langchain_core.prompts import MessagesPlaceholder

from langchain_core.messages import HumanMessage, AIMessage

import os

Paris Olympics Chatbot for Generating a Single Response

Let me briefly review how we developed a chatbot capable of generating a single response and its associated problems.

The following script creates a ChatOpenAI object, llm, that uses the GPT-4 model, one of the models that power ChatGPT.

openai_key = os.environ.get('OPENAI_KEY2')

llm = ChatOpenAI(
    openai_api_key = openai_key,
    model = 'gpt-4',
    temperature = 0.5
)

Next, we import and load the official PDF containing the Paris Olympics ticket information.

loader = PyPDFLoader("https://tickets.paris2024.org/obj/media/FR-Paris2024/ticket-prices.pdf")

docs = loader.load_and_split()

We then split our PDF document and create embeddings for the different chunks of information in the PDF document. We store the embeddings in a vector database.

embeddings = OpenAIEmbeddings(openai_api_key = openai_key)

text_splitter = RecursiveCharacterTextSplitter()

documents = text_splitter.split_documents(docs)

vector = FAISS.from_documents(documents, embeddings)
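To make the splitting and vector search step more concrete, here is a minimal plain-Python sketch of the idea. It is not how RecursiveCharacterTextSplitter, OpenAIEmbeddings or FAISS work internally: the fixed-size splitter, the word-count "embedding" and the helper names (split_text, embed, cosine, search) are all assumptions made for illustration.

```python
import math
from collections import Counter


def split_text(text, chunk_size=40, overlap=10):
    """Cut text into fixed-size character windows that overlap slightly."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


def embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, chunks, k=1):
    """Return the k chunks most similar to the query (what a retriever does)."""
    query_vector = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, embed(c)), reverse=True)
    return ranked[:k]


# Two hand-written chunks based on the prices quoted in this article.
chunks = [
    "Tennis tickets start at 30 euros",
    "Beach volleyball tickets start at 24 euros",
]
print(search("tennis tickets", chunks))
```

A real embedding model captures meaning rather than exact word overlap, which is why the chatbot can match queries that do not reuse the PDF's wording.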

Subsequently, we create a ChatPromptTemplate object that accepts our input query and the context information extracted from the PDF document. We then create document_chain, a chain that inserts the retrieved documents into this prompt and passes it to our LLM.


prompt = ChatPromptTemplate.from_template("""Answer the following question based only on the provided context:

Question: {input}

Context: {context}

"""

)

document_chain = create_stuff_documents_chain(llm, prompt)

Next, we create our vector database retriever, which retrieves information from the vector database based on the input query.

Finally, we create our retrieval_chain that accepts the retriever and document_chain as parameters and returns the final response from an LLM.

retriever = vector.as_retriever()

retrieval_chain = create_retrieval_chain(retriever, document_chain)
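The flow through these two chains can be sketched in plain Python. This is only an illustration under stated assumptions, not LangChain's implementation: run_retrieval_chain, stuff_documents and the retrieve and llm callables are hypothetical stand-ins. The sketch mirrors two things the real chain does, joining the retrieved chunks into a single context string and returning a dictionary with an "answer" key.

```python
PROMPT = """Answer the following question based only on the provided context:

Question: {input}

Context: {context}
"""


def stuff_documents(docs):
    """'Stuff' all retrieved chunks into one context string."""
    return "\n\n".join(docs)


def run_retrieval_chain(query, retrieve, llm):
    """retrieve: query -> list of text chunks; llm: prompt text -> answer text.

    Both callables are hypothetical stand-ins for the retriever and the LLM.
    """
    docs = retrieve(query)
    prompt = PROMPT.format(input=query, context=stuff_documents(docs))
    return {"input": query, "context": docs, "answer": llm(prompt)}


# Usage with stubs: the "LLM" simply echoes the prompt it received.
result = run_retrieval_chain(
    "What is the lowest ticket price for tennis games?",
    retrieve=lambda q: ["chunk one", "chunk two"],
    llm=lambda prompt: prompt,
)
print(result["answer"])
```

Notice that nothing in this flow carries over from one call to the next, which is why each query is answered in isolation.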

We define a function, generate_response(), which accepts a user's input query and returns a chatbot response.

def generate_response(query):
    response = retrieval_chain.invoke({"input": query})
    print(response["answer"])

Let's ask a query about the lowest-priced tickets for tennis games.

query = "What is the lowest ticket price for tennis games?"

generate_response(query)

Output:

The lowest ticket price for tennis games is €30.

The model responded correctly.

Let's now ask a follow-up query. The following query clearly conveys that we want the lowest ticket price for beach volleyball games.

However, since the chatbot does not have any memory to store past conversations, it treats the following query as a new standalone query. The response is different from what we aim for.

query = "And for beach volleyball?"

generate_response(query)

Output:

Beach Volleyball is played by two teams of two players each. They face off in the best of three sets on a sand court that is 16m long and 8m wide. The net is at the same height as indoor volleyball (2.24m for women and 2.43m for men). The game is contested by playing two sets to 21 points, and teams must win at least two points more than their opponents to win the set. If needed, the third set is played to 15 points. The matches take place at the Eiffel Tower Stadium in Paris.

Let's pass another query.

query = "And what is the category of this ticket?"

generate_response(query)

Output:

The context does not provide specific information on the category of the ticket.

This time, the model refuses to return any information.

If you have conversed with ChatGPT, you will have noticed that it responds to follow-up questions. In the next section, you will see how to add memory to your chatbot to track past conversations.

Adding Memory to Paris Olympics Chatbot

We will create two chat templates and three chains.

The first chat template accepts the user's input query and the message history and asks the LLM to generate a search query from them. The template has a MessagesPlaceholder that is filled with the previous chat messages.

We will also define history_retriever_chain, which takes the LLM, the retriever and this chat template and returns the matching documents from our vector database.

The following script defines our first template and chain.

prompt = ChatPromptTemplate.from_messages([
    MessagesPlaceholder(variable_name="chat_history"),
    ("user", "{input}"),
    ("user", "Given the above conversation, generate a search query to look up in order to get information relevant to the conversation")
])

history_retriever_chain = create_history_aware_retriever(llm, retriever, prompt)
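The behavior of this chain can be sketched in plain Python. According to the LangChain documentation, create_history_aware_retriever passes the input straight to the retriever when chat_history is empty, and otherwise uses the prompt and the LLM to generate a search query first. The sketch below mirrors that branching only; history_aware_retrieve and the rewrite_query and retrieve callables are hypothetical stand-ins, and messages are plain tuples instead of HumanMessage and AIMessage objects.

```python
def history_aware_retrieve(inputs, rewrite_query, retrieve):
    """Pick the search query, then run the retriever.

    inputs:        {"input": str, "chat_history": list of (role, text) tuples}
    rewrite_query: (history, user_input) -> standalone search query (the LLM's job)
    retrieve:      search query -> list of matching chunks
    """
    history = inputs.get("chat_history") or []
    if not history:
        # No past conversation: the user's input is already the search query.
        query = inputs["input"]
    else:
        # Past conversation exists: ask the "LLM" for a standalone query.
        query = rewrite_query(history, inputs["input"])
    return retrieve(query)


# Stubs for illustration only; a real LLM would write the query itself.
def fake_rewrite(history, user_input):
    return "lowest ticket price beach volleyball"


def fake_retrieve(query):
    return [f"documents matching: {query}"]


history = [
    ("human", "What is the lowest ticket price for tennis games?"),
    ("ai", "The lowest ticket price for tennis games is €30."),
]
print(history_aware_retrieve(
    {"input": "And for Beach Volleyball?", "chat_history": history},
    fake_rewrite,
    fake_retrieve,
))
```

The key point is that the retriever never sees the raw follow-up "And for Beach Volleyball?"; it sees a query that already includes the missing context.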

You can test the above chain using the following script. The chat_history list will contain HumanMessage and AIMessage objects corresponding to user queries and chatbot responses.

Next, while invoking the history_retriever_chain object, we pass the user input and the chat history.

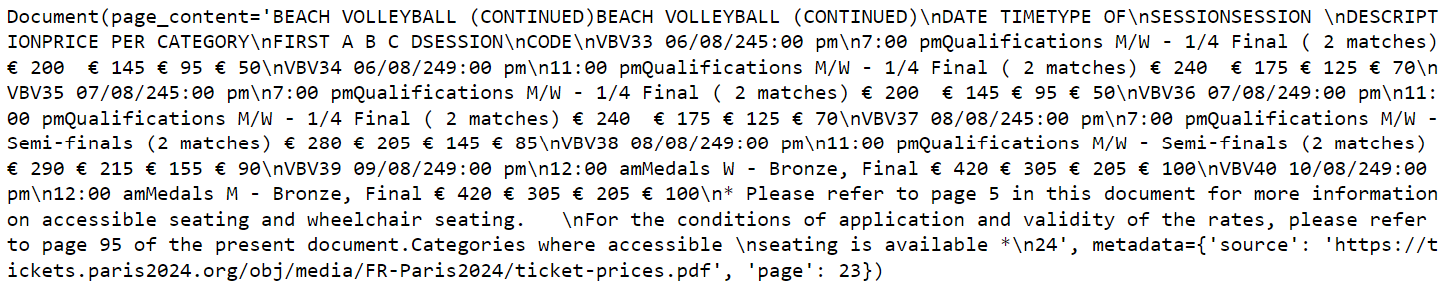

In the response, you will see the matched documents returned by the retriever. As an example, I have only printed the first document. If you look carefully, you will see the ticket prices for the beach volleyball games. We will pass this information on to our next chain, which will return the final response.

chat_history = [

HumanMessage(content="What is the lowest ticket price for tennis games?"),

AIMessage(content="The lowest ticket price for tennis games is €30.")

]

result = history_retriever_chain.invoke({

"chat_history": chat_history,

"input": "And for Beach Volleyball?"

})

result[0]

Output:

Let's now define our second prompt template and chain. This prompt template receives the user input and message history, along with the context information returned by history_retriever_chain. We will also define the corresponding document chain, which sends this prompt to the LLM to generate a response.

prompt = ChatPromptTemplate.from_messages([
    ("system", "Answer the user's questions based on the below context:\n\n{context}"),
    MessagesPlaceholder(variable_name="chat_history"),
    ("user", "{input}")
])

document_chain = create_stuff_documents_chain(llm, prompt)
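To see what the LLM receives from this second template, the following plain-Python sketch assembles the message list by hand. It is a simplified illustration, not LangChain's code: build_messages is a hypothetical helper, and messages are plain (role, text) tuples.

```python
def build_messages(context_docs, chat_history, user_input):
    """Assemble the messages in the same order as the second prompt template."""
    system_text = (
        "Answer the user's questions based on the below context:\n\n"
        + "\n\n".join(context_docs)  # retrieved chunks fill {context}
    )
    return [
        ("system", system_text),  # instructions plus retrieved context
        *chat_history,            # MessagesPlaceholder: earlier turns
        ("user", user_input),     # the new query comes last
    ]


messages = build_messages(
    context_docs=["first retrieved chunk", "second retrieved chunk"],
    chat_history=[
        ("human", "What is the lowest ticket price for tennis games?"),
        ("ai", "The lowest ticket price for tennis games is €30."),
    ],
    user_input="And for Beach Volleyball?",
)
for role, text in messages:
    print(f"{role}: {text}")
```

Because the earlier turns sit between the context and the new query, the model can work out what "And for Beach Volleyball?" refers to.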

Finally, we will chain history_retriever_chain and document_chain together sequentially, using create_retrieval_chain(), to create our final retrieval_chain.

We will pass the chat history and user input to this retrieval_chain, which first fetches the context information using the chat history from the history_retriever_chain. Next, the context retrieved from the history_retriever_chain, along with the user input and chat history, will be passed to the document_chain to generate the final response.

Since we already have some messages in the chat history, we can test our retrieval_chain using the following script.

retrieval_chain = create_retrieval_chain(history_retriever_chain, document_chain)

result = retrieval_chain.invoke({
    "chat_history": chat_history,
    "input": "And for Beach Volleyball?"
})

print(result['answer'])

Output:

The lowest ticket price for Beach Volleyball games is €24.

In the above output, you can see that ChatGPT successfully generated a response to a follow-up question.

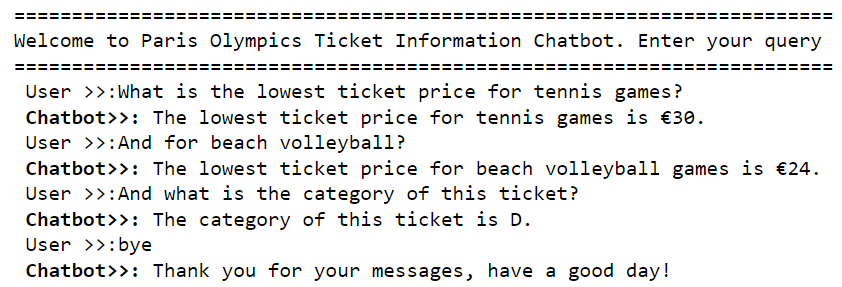

Putting it All Together - A Command Line Chatbot

To create a simple command-line chatbot, we will instantiate an empty list to store our past conversations.

Next, we define the generate_response_with_memory() function that accepts the user query as an input parameter and invokes the retrieval_chain to generate a model response.

Inside the generate_response_with_memory() function, we create HumanMessage and AIMessage objects using the user queries and chatbot responses and add them to the chat_history list.

chat_history = []

def generate_response_with_memory(query):
    result = retrieval_chain.invoke({
        "chat_history": chat_history,
        "input": query
    })

    response = result['answer']

    chat_history.extend([HumanMessage(content = query),
                         AIMessage(content = response)])

    return response
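The function above keeps its memory in a module-level list. As one possible alternative, the sketch below wraps the history in a small class so that each conversation owns its own memory and can optionally cap its length. ChatSession, chain_invoke and max_messages are hypothetical names, and the (role, text) tuples stand in for HumanMessage and AIMessage objects so that the sketch runs without LangChain. With the real chain, you could pass retrieval_chain.invoke as chain_invoke and store message objects instead.

```python
class ChatSession:
    """Holds one conversation's history and feeds it to a chain on every turn."""

    def __init__(self, chain_invoke, max_messages=None):
        self.chain_invoke = chain_invoke  # callable: dict -> {"answer": str}
        self.max_messages = max_messages  # None means unlimited history
        self.history = []

    def ask(self, query):
        # Pass a copy so the chain cannot mutate our stored history.
        result = self.chain_invoke({
            "chat_history": list(self.history),
            "input": query,
        })
        answer = result["answer"]
        self.history.extend([("human", query), ("ai", answer)])
        # Drop the oldest messages once the cap is exceeded.
        if self.max_messages is not None:
            excess = len(self.history) - self.max_messages
            if excess > 0:
                del self.history[:excess]
        return answer


# Stub chain for illustration: reports how many past messages it was given.
def fake_chain(inputs):
    return {"answer": f"I can see {len(inputs['chat_history'])} earlier messages."}


session = ChatSession(fake_chain, max_messages=4)
print(session.ask("What is the lowest ticket price for tennis games?"))
print(session.ask("And for Beach Volleyball?"))
```

Capping the history is a design choice rather than a requirement: a long conversation otherwise sends every earlier message to the model on each turn.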

Finally, we can execute a while loop that asks users to enter queries as console inputs. If the input is exactly the string bye, we empty the chat_history list, print a goodbye message and quit the loop.

Otherwise, the query is passed to the generate_response_with_memory() function to generate a chatbot response.

Here is the script and a sample output.

print("=======================================================================")

print("Welcome to Paris Olympics Ticket Information Chatbot. Enter your query")

print("=======================================================================")

query = ""

while query != "bye":

    query = input("\033[1m User >>:\033[0m")

    if query == "bye":
        chat_history = []
        print("\033[1m Chatbot>>:\033[0m Thank you for your messages, have a good day!")
        break

    response = generate_response_with_memory(query)

    print(f"\033[1m Chatbot>>:\033[0m {response}")

Output:
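The loop in the script only exits on an exact, lowercase bye. A slightly more forgiving variant is sketched below. It is an assumption-laden illustration rather than the script used in this article: run_chat is a hypothetical helper, and the read and write parameters exist only so the loop can be exercised without a console.

```python
def run_chat(respond, read=input, write=print):
    """Run a console chat loop until the user types 'bye' (any case, any padding).

    respond: query -> answer, e.g. generate_response_with_memory
    read:    prompt -> user text (defaults to the built-in input)
    write:   text -> None (defaults to the built-in print)
    """
    while True:
        query = read("User >>: ").strip()
        if not query:
            continue  # ignore empty lines instead of sending them to the chain
        if query.lower() == "bye":
            write("Chatbot>>: Thank you for your messages, have a good day!")
            break
        write(f"Chatbot>>: {respond(query)}")


# Scripted demo: canned inputs and an echoing "chatbot" replace the real ones.
scripted_inputs = iter(["hello", "", "  Bye "])
run_chat(
    respond=lambda q: f"You asked: {q}",
    read=lambda prompt: next(scripted_inputs),
)
```

To use it with the chatbot built above, you would call run_chat(generate_response_with_memory).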

Conclusion

A conversational chatbot keeps track of the past conversation. In this article, you saw how to create a Paris Olympics ticket information chatbot that answers user queries and follow-up questions using LangChain and ChatGPT. You can use the same approach to develop conversational chatbots for other problems.

Feel free to leave your feedback in the comments.