As a researcher, I have often found myself buried under a mountain of research articles, each promising insights and breakthroughs crucial for my work. The sheer volume of information is overwhelming, and the time it takes to extract the relevant data can be daunting.

However, extracting meaningful information from research papers has become much easier with the advent of large language models. Interacting with these models programmatically can still be tricky, particularly when you want to query your own documents: the documents have to be loaded, split up, and searched for the relevant parts before the model ever sees a question.

Fortunately, the Python LangChain library lets you query powerful language models such as OpenAI's GPT-4 in just a few lines of code, offering a lifeline to those of us who need to sift through extensive research quickly and efficiently.

This article explores how LangChain can be used to extract information from research papers, saving precious time and letting us focus on innovation and analysis. You can apply the same process to any other PDF document.

Downloading and Importing Required Libraries

Before diving into automated information extraction, we need to set up our environment with the necessary tools. The following libraries form the backbone of our extraction process, and each serves a specific purpose:

- LangChain: provides building blocks for chaining language models, embedding models, and vector stores together to perform complex tasks.
- OpenAI: provides access to OpenAI's language and embedding models.
- PyPDF2: reads PDF files and extracts their text content.
- faiss-cpu: performs efficient similarity search and clustering of dense vectors.
- tiktoken: OpenAI's tokenizer, which LangChain's OpenAI embeddings wrapper uses to count tokens.
- rich: renders rich text and formatting in the terminal.

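The installation process is straightforward with pip. The `!` prefix runs each command from a Jupyter notebook cell; drop it if you are installing from a regular terminal.

```
!pip install langchain
!pip install openai
!pip install PyPDF2
!pip install faiss-cpu
!pip install tiktoken
!pip install rich
```

Note that the code in this article uses LangChain's older import paths. On recent LangChain releases, the OpenAI and FAISS integrations live in the separate `langchain-openai` and `langchain-community` packages, and the older imports may emit deprecation warnings or fail.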
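Because LangChain's API has changed considerably between releases, it helps to know exactly which versions are installed when an import fails. The following sketch uses only the standard library's `importlib.metadata` to report the installed version of each package from the list above, or `None` if a package is missing; `installed_versions` is a hypothetical helper name, not part of any of these libraries.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as they are passed to pip
PACKAGES = ["langchain", "openai", "PyPDF2", "faiss-cpu", "tiktoken", "rich"]


def installed_versions(packages):
    """Map each distribution name to its installed version string, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name}: {ver or 'not installed'}")
```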

Once installed, we import the necessary classes from the modules.

```python
from PyPDF2 import PdfReader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import FAISS
```

Finally, we set our OpenAI API key. Replace the placeholder in the following script with your own key:

```python
import os

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY_HERE"
```
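Hard-coding the key in a script or notebook makes it easy to leak when you share your code. A minimal alternative, sketched below, reads the key from the environment and only prompts for it with Python's `getpass` when it is missing; `ensure_api_key` is a hypothetical helper name. Since `OpenAIEmbeddings()` and `OpenAI()` read `OPENAI_API_KEY` from the environment, calling `ensure_api_key()` once before creating them should be enough.

```python
import os
from getpass import getpass


def ensure_api_key(var="OPENAI_API_KEY", prompt=getpass):
    """Return the key stored in environment variable `var`, prompting for it only if unset."""
    key = os.environ.get(var)
    if not key:
        # getpass hides the typed key instead of echoing it to the screen
        key = prompt(f"Enter {var}: ")
        os.environ[var] = key
    return key
```

The `prompt` parameter defaults to `getpass` but can be swapped for any function that takes a prompt string and returns the key, which also makes the helper easy to test.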

Reading, Chunking and Vectorizing PDF Documents

The first step in our journey is to read the PDF document. We use PdfReader from PyPDF2 to open the file and extract its text content.

For the sake of example, I will extract information from the RoBERTa research paper (arXiv:1907.11692).

```python
pdf_reader = PdfReader(r'D:\Datasets\1907.11692.pdf')
```

Once we have the reader object, we iterate through the pages, extracting the text and concatenating it into a single string:

```python
pdf_text = ''

# Append the text of every page that has extractable text
for page in pdf_reader.pages:
    page_content = page.extract_text()
    if page_content:
        pdf_text += page_content

pdf_text  # in a notebook, this displays the extracted text
```

Output:

The above output shows an extract of text from the research paper.
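One detail worth knowing: the loop above concatenates pages with no separator, so the last word of one page can fuse with the first word of the next. Joining pages with a newline avoids that and also gives the newline-based splitter used later a clean break point at each page boundary. The helper below is a minimal sketch; `join_page_texts` is a hypothetical name, and it only assumes each page object has an `extract_text()` method, as PyPDF2's page objects do. The `FakePage` class exists only so the example runs without a PDF.

```python
def join_page_texts(pages, separator="\n"):
    """Extract text from each page and join the non-empty results with `separator`."""
    texts = []
    for page in pages:
        content = page.extract_text()
        if content:  # skip pages with no extractable text
            texts.append(content)
    return separator.join(texts)


class FakePage:
    """Stand-in for a PyPDF2 page object, used only for this example."""

    def __init__(self, text):
        self._text = text

    def extract_text(self):
        return self._text


pages = [FakePage("end of page one"), FakePage(""), FakePage("start of page two")]
print(join_page_texts(pages))
```

With a real reader, you would call `join_page_texts(pdf_reader.pages)` in place of the loop.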

The resulting pdf_text variable contains the entire text of the PDF document. However, language models can only process a limited number of tokens per request, so the full text of a paper is usually too long to send in one go. To address this, we use CharacterTextSplitter from LangChain to divide the text into manageable chunks.

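```python
splitter = CharacterTextSplitter(
    separator="\n",       # split the text at line breaks first
    chunk_size=1000,      # target maximum number of characters per chunk
    chunk_overlap=200,    # characters shared by consecutive chunks
    length_function=len,  # measure length in characters
)

text_chunks = splitter.split_text(pdf_text)

print(f"Total chunks {len(text_chunks)}")
print("============================")
print(text_chunks[0])
```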

Output:

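The splitter first breaks the text at newline characters and then merges those pieces into chunks of up to about 1000 characters, with up to 200 characters of overlap between consecutive chunks. The overlap reduces the chance that a sentence or idea cut at a chunk boundary is lost, because the end of one chunk is repeated at the start of the next.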

From the above output, you can see the total number of chunks and the text of the first chunk.
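To make `chunk_size` and `chunk_overlap` concrete, here is a minimal pure-Python sliding-window chunker. It is a simplified stand-in, not LangChain's algorithm: `CharacterTextSplitter` first splits on the separator and then merges the pieces, whereas this sketch cuts at fixed character offsets. The function name `sliding_window_chunks` is hypothetical.

```python
def sliding_window_chunks(text, chunk_size=1000, chunk_overlap=200):
    """Cut `text` into windows of `chunk_size` characters that overlap by `chunk_overlap`."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # distance between the starts of consecutive chunks
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
    return chunks


print(sliding_window_chunks("abcdefghij", chunk_size=4, chunk_overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

Each window starts `chunk_size - chunk_overlap` characters after the previous one, so with the article's settings a new chunk begins every 800 characters. Using OpenAI's rule of thumb of roughly four characters per token for English text, a 1000-character chunk is on the order of 250 tokens.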

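Next, we embed these text chunks with OpenAI's embedding model and store the vectors in a FAISS index for efficient similarity search.

```python
embeddings = OpenAIEmbeddings()

# Embed every chunk and build a FAISS index over the resulting vectors
embedding_vectors = FAISS.from_texts(text_chunks, embeddings)
```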

The embedding_vectors object is now a FAISS vector store holding one embedding per chunk, ready for similarity searches based on queries.
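Conceptually, a similarity search embeds the query with the same model and returns the stored chunks whose vectors lie closest to it. As far as I know, `FAISS.from_texts` builds a flat (exact, brute-force) index using Euclidean (L2) distance by default; because OpenAI embeddings are normalized to unit length, ranking by L2 distance gives the same order as ranking by cosine similarity. The sketch below reproduces that exhaustive search in plain Python over made-up three-dimensional vectors. Real embeddings have far more dimensions, and the chunk labels and numbers here are purely illustrative.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query, index, k=4):
    """Return the k keys of `index` whose vectors are closest to `query` (exact search).

    k=4 mirrors the default number of results of LangChain's similarity_search().
    """
    ranked = sorted(index, key=lambda key: l2_distance(query, index[key]))
    return ranked[:k]


# Toy "vector store": chunk label -> embedding (made-up numbers)
index = {
    "datasets chunk": [0.9, 0.1, 0.0],
    "results chunk": [0.1, 0.9, 0.0],
    "related-work chunk": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]  # pretend embedding of a question about datasets
print(top_k(query, index, k=2))
```

An exhaustive scan like this is fine for the few hundred chunks of a single paper; FAISS's value is doing the same comparison much faster and offering approximate indexes when the collection grows large.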

Extracting Information from Research Papers

With our document vectorized, we can now extract information from our research paper. We load a question-answering chain using LangChain's load_qa_chain function and OpenAI's language model:

```python
from langchain.chains.question_answering import load_qa_chain
from langchain.llms import OpenAI

# "stuff" inserts all retrieved chunks into a single prompt for the model
qa_chain = load_qa_chain(OpenAI(), chain_type="stuff")
```
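The `"stuff"` chain type is the simplest of LangChain's document chains: it "stuffs" every document it receives into one prompt and sends that prompt to the model in a single call. The sketch below shows the idea in plain Python. The template text is a hypothetical stand-in, not the exact prompt LangChain uses, and `build_stuff_prompt` is a hypothetical name.

```python
# Hypothetical question-answering template
TEMPLATE = (
    "Use the following pieces of context to answer the question at the end.\n\n"
    "{context}\n\n"
    "Question: {question}\n"
    "Helpful Answer:"
)


def build_stuff_prompt(chunks, question, template=TEMPLATE):
    """Concatenate all chunks into one context block and fill in the template."""
    context = "\n\n".join(chunks)
    return template.format(context=context, question=question)


prompt = build_stuff_prompt(
    ["Chunk about BookCorpus.", "Chunk about CC-NEWS."],
    "Which datasets were used?",
)
print(prompt)
```

Because everything goes into a single prompt, this approach works well for a handful of short chunks but can overflow the model's context window if too many are passed; `load_qa_chain` also accepts the `map_reduce`, `refine`, and `map_rerank` chain types for that situation.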

You can now ask questions about the content of the research paper. First, pass the question string to the similarity_search() method of the embedding_vectors object, which returns the chunks of text most relevant to the question (four by default). Then execute qa_chain with its run() method, passing those chunks as the input_documents argument and the question string as the question argument. The chain combines the chunks and the question into a prompt and returns the model's answer.

Let's now look at some examples.

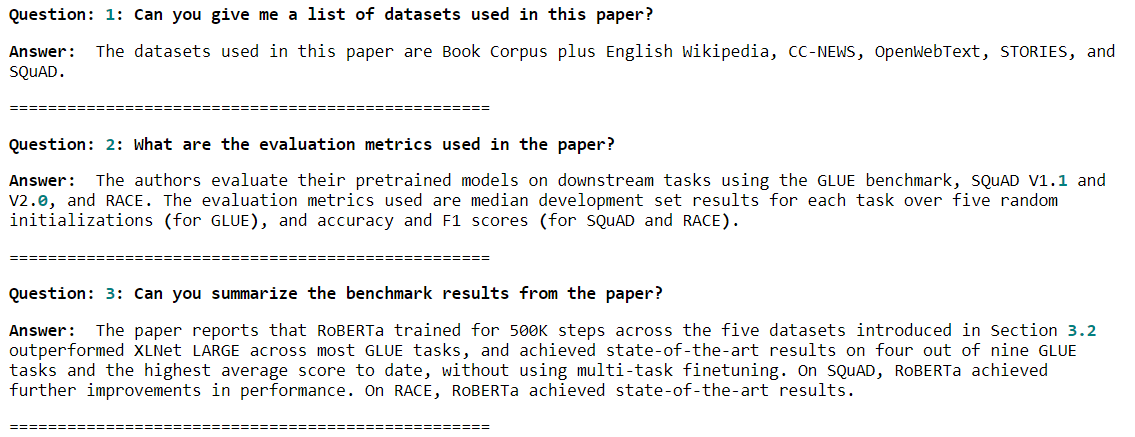

The following script successfully returns the list of datasets used in the paper. (You can verify the response by reviewing section 3.2 of the research paper we are analyzing).

```python
question = "Can you give me a list of datasets used in this paper?"

research_paper = embedding_vectors.similarity_search(question)

qa_chain.run(input_documents=research_paper, question=question)
```

Output:

' The datasets used in this paper include Book Corpus and English Wikipedia, CC-NEWS, OpenWebText, and Stories.'

Similarly, the script below asks for a summary of the paper's benchmark results.

```python
question = "Can you summarize the benchmark results from the paper?"

research_paper = embedding_vectors.similarity_search(question)

qa_chain.run(input_documents=research_paper, question=question)
```

Output:

' The paper found that RoBERTa trained for 500K steps outperformed XLNet LARGE across most tasks on GLUE, SQuaD, and RACE. On GLUE, RoBERTa achieved state-of-the-art results on all 9 of the tasks in the first setting (single-task, dev) and 4 out of 9 tasks in the second setting (ensembles, test), and had the highest average score to date. On SQuAD and RACE, RoBERTa trained for 500K steps achieved significant gains in downstream task performance.'

To streamline the process, we define a function get_answer() that takes a list of questions and returns their answers.

```python
def get_answer(questions):
    """Return a list with one answer per question, in the same order."""
    answers = []
    for question in questions:
        # Retrieve the most relevant chunks, then answer from them
        research_paper = embedding_vectors.similarity_search(question)
        answer = qa_chain.run(input_documents=research_paper,
                              question=question)
        answers.append(answer)
    return answers
```
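If you prefer to keep each question paired with its answer rather than matching two lists by index, a variant can return a dictionary. The sketch below takes the retrieval and answering steps as parameters so it can be exercised with stand-in functions; `answer_questions`, `fake_search`, and `fake_answer` are hypothetical names. With the objects from this article, you would pass `embedding_vectors.similarity_search` as `search` and `lambda docs, q: qa_chain.run(input_documents=docs, question=q)` as `answer`.

```python
from typing import Callable, Dict, List, Sequence


def answer_questions(
    questions: Sequence[str],
    search: Callable[[str], List],
    answer: Callable[[List, str], str],
) -> Dict[str, str]:
    """Retrieve relevant chunks for each question, answer it, and map question -> answer."""
    results = {}
    for question in questions:
        docs = search(question)                     # e.g. embedding_vectors.similarity_search
        results[question] = answer(docs, question)  # e.g. a wrapper around qa_chain.run
    return results


# Stand-in functions so the sketch runs without an API key
def fake_search(question):
    return [f"chunk relevant to: {question}"]


def fake_answer(docs, question):
    return f"{len(docs)} chunk(s) used"


print(answer_questions(["Q1?", "Q2?"], fake_search, fake_answer))
```

One trade-off of the dictionary: asking the same question twice yields a single entry, which is usually what you want when building a question bank for a paper.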

Finally, we use the rich module to print the questions and their corresponding answers in a formatted manner.

```python
from rich import print  # rich's print renders [bold] markup

questions = ["Can you give me a list of datasets used in this paper?",
             "What are the evaluation metrics used in the paper?",
             "Can you summarize the benchmark results from the paper?"]

answers = get_answer(questions)

for i, (question, answer) in enumerate(zip(questions, answers), start=1):
    print(f"[bold]Question {i}: {question}[/bold]")
    print(f"[bold]Answer:[/bold] {answer}")
    print("==================================================")
```


Conclusion

In conclusion, LangChain, combined with OpenAI's models and a FAISS vector store, provides a powerful way to extract information from PDF documents such as research papers. By automating the process of reading, chunking, vectorizing, and querying documents, we can significantly reduce the time spent on literature review and focus on the creative and analytical aspects of our work. The future of research is here, and it's automated, efficient, and accessible.