In the rapidly evolving field of Natural Language Processing (NLP), open-source large language models (LLMs) are becoming increasingly popular because they are free to use. Among these, the Mistral family stands out for offering state-of-the-art models that are freely accessible to the public.

Comparable in performance to the renowned GPT 3.5, Mistral 7b enables users to perform various NLP tasks, such as text generation, text classification, and more, without any cost.

While GPT 3.5 can be used for free in a browser, calling it from a Python application via the OpenAI API incurs charges. This is where open-source LLMs like Mistral 7b become game-changers.

This article explores how to use the Mistral 7b Instruct model (seven billion parameters) to perform seven common NLP tasks in your Python applications using the Hugging Face Transformers library. So, let's dive in without further ado.

Importing and Installing Required Libraries

The following script installs the libraries required to run scripts in this article.

!pip install git+https://github.com/huggingface/transformers

!pip3 install -q -U bitsandbytes==0.42.0

!pip3 install -q -U accelerate==0.27.1

Since I am using Google Colab to run the scripts in this article, the rest of the libraries are pre-installed in the environment.

The following script imports the required libraries.

from transformers import AutoModelForCausalLM, AutoTokenizer, logging

from transformers import BitsAndBytesConfig

import torch

Importing and Configuring the Mistral 7b Instruct Model

Mistral 7b is a large model with seven billion parameters. We will quantize it by reducing the precision of its weights to four bits, which allows us to fit Mistral 7b on low-memory hardware. The following script defines the quantization settings for our Mistral 7b model: weights are stored in the 4-bit NF4 format, and computations run in bfloat16.
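To get a feel for why 4-bit quantization matters, here is a back-of-the-envelope estimate of the memory needed for the weights alone. It is only a sketch: it assumes a round seven billion parameters and ignores activations, the attention cache, and the small overhead that quantization adds, so real usage will be somewhat higher. The helper name `weight_memory_gb` is purely illustrative.

```python
# Rough estimate of weight memory at different precisions.
# Assumption: exactly 7e9 parameters; the real checkpoint differs slightly.
NUM_PARAMS = 7_000_000_000

def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Return the size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

for name, bits in [("float32", 32), ("float16/bfloat16", 16), ("4-bit (nf4)", 4)]:
    print(f"{name:>18}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Under these assumptions, the weights shrink from about 14 GB in half precision to about 3.5 GB in four bits.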

#Ignore warnings

logging.set_verbosity(logging.CRITICAL)

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.bfloat16

)

Next, we load the Mistral-7B-Instruct-v0.1 model, a variant of the Mistral 7b model fine-tuned to follow instructions in a chat format. The script below downloads this model and its tokenizer from the Hugging Face Hub. We pass the quantization configuration to the quantization_config parameter of the from_pretrained() method.

model_id = "mistralai/Mistral-7B-Instruct-v0.1"

device = "cuda" # the device to load the model onto

model = AutoModelForCausalLM.from_pretrained(model_id,

quantization_config=bnb_config,

device_map={"":0})

tokenizer = AutoTokenizer.from_pretrained(model_id)

We are now ready to interact with the Mistral 7b model. Let's see how to perform various NLP tasks with Mistral.

1. Question Answering with Mistral 7b

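We will define a general-purpose function, generate_response(), that accepts the following parameters:

- the input text

- the maximum number of new tokens to generate (an upper bound on the response length, measured in tokens rather than characters)

- the model temperature (controls how random the sampling is: lower values give more predictable output, higher values give more varied output)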
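To illustrate what the temperature parameter does, here is a minimal sketch of temperature-scaled softmax in plain Python. The scores in `logits` are made up, and the function is a simplified stand-in for what happens inside a sampling-based generation loop, not the library's actual code.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, dividing by the temperature first."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

for t in (0.1, 0.9):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring token, so sampling becomes nearly deterministic. At a higher temperature the probabilities are spread more evenly, so the output varies more between runs.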

Inside the generate_response function, we wrap the input text in a message list, convert the messages to the required format using the tokenizer.apply_chat_template() method, and pass the encoded inputs to the model.generate() method.

The decoded output contains both the prompt and the model's response. We extract only the response by splitting the string on the [/INST] substring and keeping what follows it.
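To make the splitting step concrete, here is a toy version that needs no model. The helper `approximate_mistral_prompt` is a hand-written stand-in for the chat template (single user turn only), and `fake_decoded` is made-up text, so the exact whitespace and tokens produced by the real tokenizer may differ.

```python
def approximate_mistral_prompt(user_text: str) -> str:
    """Hand-written stand-in for the chat template (single user turn only)."""
    return f"<s>[INST] {user_text} [/INST]"

prompt = approximate_mistral_prompt("How to bake a pizza?")

# Stand-in for a decoded generation: the prompt followed by made-up answer text.
fake_decoded = prompt + " Preheat the oven, roll out the dough, add toppings.</s>"

# Keep everything after the first [/INST] marker.
raw_answer = fake_decoded.split("[/INST]", 1)[1]
print(repr(raw_answer))
```

The extracted text still ends with the end-of-sequence marker `</s>`, which is why a final trimming step is needed.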

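def generate_response(input_text, response_tokens, temperature):
    # Wrap the prompt in the chat message format expected by the tokenizer
    messages = [
        {"role": "user", "content": input_text},
    ]

    # Apply the chat template and move the token IDs to the model's device
    encodeds = tokenizer.apply_chat_template(messages, return_tensors="pt")
    model_inputs = encodeds.to(device)

    # Sample up to response_tokens new tokens
    generated_ids = model.generate(model_inputs,
                                   max_new_tokens=response_tokens,
                                   temperature=temperature,
                                   do_sample=True)

    decoded = tokenizer.batch_decode(generated_ids)

    # Keep the text after [/INST] and drop the end-of-sequence marker.
    # removesuffix (Python 3.9+) removes the exact string, whereas
    # rstrip("</s>") would also strip trailing '<', '/', 's', '>' characters.
    return decoded[0].split("[/INST]")[1].removesuffix("</s>")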
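One detail is worth knowing when trimming the end-of-sequence marker: `str.rstrip("</s>")` removes any trailing run of the characters `<`, `/`, `s`, and `>`, not the literal suffix. The small sketch below, using a made-up answer string, compares it with `str.removesuffix` (available from Python 3.9).

```python
# A made-up answer that happens to end in characters found in "</s>".
raw = " Use the <details> tags</s>"

# str.rstrip treats its argument as a set of characters to remove.
stripped_chars = raw.rstrip("</s>")

# str.removesuffix removes only the exact trailing string.
stripped_suffix = raw.removesuffix("</s>")

print(repr(stripped_chars))   # the final "s" of "tags" is lost as well
print(repr(stripped_suffix))  # only the marker is removed
```

For answers that end in a letter "s" or in markup, the suffix-based approach is the safer choice.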

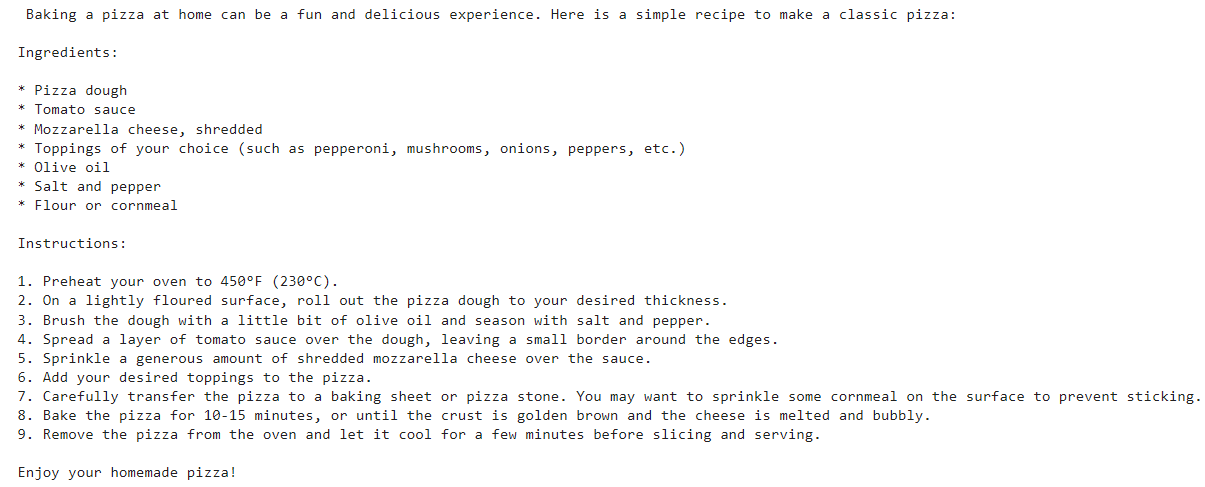

Let's now ask our model a simple question: how to bake a pizza. Notice that we set the temperature to 0.1 since we want the model to be more accurate than creative.

input_text = "How to bake a pizza?"

response = generate_response(input_text, 1000, 0.1)

print(response)

Output:

2. Text Summarization with Mistral 7b

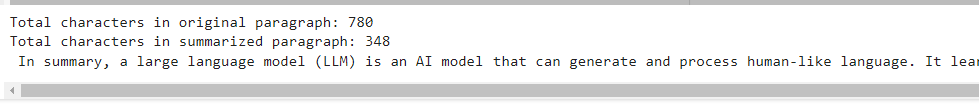

Let's now perform text summarization. In this case, we set the model temperature to a higher value to allow the model to be more creative in summarizing the input paragraph.

paragraph = """

A large language model (LLM) is a language model notable for its ability to achieve general-purpose language generation and other natural language processing tasks such as classification.

LLMs acquire these abilities by learning statistical relationships from text documents during a computationally intensive self-supervised and semi-supervised training process.[1]

LLMs can be used for text generation, a form of generative AI, by taking an input text and repeatedly predicting the next token or word.[2] LLMs are artificial neural networks.

The largest and most capable are built with a decoder-only transformer-based architecture while some recent implementations are based on other architectures, such as recurrent neural network variants and Mamba (a state space model)

"""

print(f"Total characters in original paragraph: {len(paragraph)}")

input_text = f"Summarize the following text: {paragraph}"

response = generate_response(input_text, 500, 0.9)

print(f"Total characters in summarized paragraph: {len(response)}")

print(response)

Output:

You will see that the model generates a very accurate summary.

3. Text Classification with Mistral 7b

Text classification involves assigning a label or category to an input text. In the following script, we ask the model to assign a sentiment label to the input review.

We ask the model to return one of the three possible output labels. To receive a short response, we set the number of output tokens to 10. In addition, we set the temperature to 0.1 to get a more certain and accurate response.

review = """

I enjoyed the movie but found it very long at times with boring scenes.

"""

input_text = f"Find the sentiment of this review. Your response should only contain a single word: 'positive', 'negative', or 'neutral': {review}"

response = generate_response(input_text, 10, 0.1)

print(response)

Output:

neutral

The above output shows that the model has successfully guessed the label for the input review.
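Because the model returns free text, the label may come back with extra whitespace, punctuation, or a short sentence around it. One possible way to handle this is to map the response onto the allowed labels before using it downstream. The helper `normalize_label` and its `"unknown"` fallback are illustrative assumptions, not part of any library.

```python
import re

ALLOWED_LABELS = ("positive", "negative", "neutral")

def normalize_label(response: str, default: str = "unknown") -> str:
    """Return the first allowed label found in the response, else `default`."""
    words = re.findall(r"[a-z]+", response.lower())
    for word in words:
        if word in ALLOWED_LABELS:
            return word
    return default

print(normalize_label(" Neutral."))
print(normalize_label("The sentiment is 'positive'"))
print(normalize_label("I cannot tell"))
```

Returning a fallback value instead of raising an error makes it easy to count or re-prompt the cases where the model did not follow the requested format.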

4. Text Translation with Mistral 7b

Text translation is another common task that you can perform with Mistral 7b. Again, I recommend setting the temperature to a lower value for text translation tasks. Here is an example:

# Named "sentence" rather than "input" to avoid shadowing Python's built-in input()
sentence = """

I am feeling hugry, I think I should go out and have lunch.

"""

input_text = f"Translate the following into French. The response should only contain the French translation: {sentence}"

response = generate_response(input_text, 100, 0.1)

print(response)

Output:

Je suis fâché, je pense que je devrais sortir et avoir déjeuner.

Note that this translation is not accurate: "fâché" means "angry", not "hungry", which may be a side effect of the misspelled word "hugry" in the input, and "avoir déjeuner" is not idiomatic French. It is worth reviewing translations before relying on them.

5. Text Generation with Mistral 7b

You can also ask Mistral 7b to generate text for you. For instance, the following script asks Mistral to recommend five catchy names for an ice cream parlor. Since this is a creative task, we set the temperature to a higher value.

input_text = "Give me 5 catchy names for an ice cream parlor on a beach"

response = generate_response(input_text, 200, 0.9)

print(response)

Output:

1. "Scoops by the Sea"

2. "Beach Bites"

3. "Surfside Scoops"

4. "Wave Wonders"

5. "Ocean Oasis"

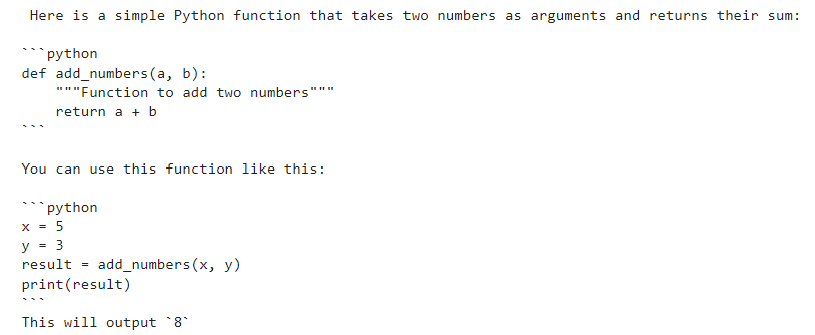

6. Code Generation with Mistral 7b

Mistral 7b can also generate code, as seen in the following script.

input_text = "Write a Python function to add two numbers"

response = generate_response(input_text, 200, 0.1)

print(response)

Output:

Note: Be careful with generated code and always verify it before using it. If it produces an error, you can pass the error message back to Mistral and ask for a fix.
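One way to verify generated code is to extract it from the response, check that it parses, and then run a small test against it. The sketch below assumes the response wraps the code in a fenced block. The response text `fake_response`, the helper `extract_code`, and the function name `add_numbers` are all made up for illustration, since the real output will vary.

```python
import re

FENCE = "`" * 3  # built programmatically to avoid a literal fence in this example

# Made-up stand-in for a model response containing a fenced code block.
fake_response = (
    "Here is a function:\n"
    f"{FENCE}python\n"
    "def add_numbers(a, b):\n"
    "    return a + b\n"
    f"{FENCE}\n"
    "You can call it with two numbers."
)

def extract_code(response: str) -> str:
    """Return the body of the first fenced block, or the whole text if none."""
    pattern = re.escape(FENCE) + r"(?:python)?\s*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, response, flags=re.DOTALL)
    return match.group(1) if match else response

code = extract_code(fake_response)

# Step 1: check that the code at least parses, without running it.
compile(code, "<generated>", "exec")

# Step 2: run it in a scratch namespace and test the behaviour you expect.
# Only do this with code you have read; exec runs arbitrary statements.
namespace = {}
exec(code, namespace)
assert namespace["add_numbers"](2, 3) == 5
print("generated code passed the check")
```

The parse check catches syntax errors cheaply, while the assertion confirms that the function actually does what was asked.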

7. Named Entity Recognition

Another common task you can perform with Mistral is named entity recognition (NER), which involves identifying and classifying critical information (entities) in text into predefined categories such as the names of persons, organizations, locations, etc.

Here is an example of performing NER in Python with Mistral 7b.

# Named "sentence" rather than "input" to avoid shadowing Python's built-in input()
sentence = """

Ronaldo from Portugal was one of the best players Manchester United ever produced in the Premier League

"""

input_text = f"Extract named entities from the following text. Response should be in the form word -> entity type: {sentence}"

response = generate_response(input_text, 100, 0.1)

print(response)

Output:

Ronaldo -> Person

Portugal -> Location

Manchester United -> Organization

Premier League -> Event
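Since we asked for a fixed "word -> entity type" format, the response is easy to turn into a Python dictionary for further processing. The following is a minimal sketch. The helper name `parse_entities` is an assumption, and it simply skips any line that does not contain an arrow.

```python
def parse_entities(response: str) -> dict:
    """Parse lines of the form 'text -> type' into a {text: type} dict."""
    entities = {}
    for line in response.splitlines():
        if "->" not in line:
            continue  # skip blank lines or any extra commentary
        text, _, label = line.partition("->")
        entities[text.strip()] = label.strip()
    return entities

# The response text shown in the output above.
response = """
Ronaldo -> Person
Portugal -> Location
Manchester United -> Organization
Premier League -> Event
"""

entities = parse_entities(response)
print(entities)
```

A dictionary keeps only one label per entity string, so if the same text could appear with different types, a list of pairs would be a better fit.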

Conclusion

In this article, you saw how to perform various NLP tasks using the Mistral 7b model in Python. Mistral 7b is open-source and free to use, including for commercial purposes. Its performance is on par with GPT 3.5, but calling GPT 3.5 via the OpenAI API incurs a cost. This is where you can instead run Mistral 7b yourself through the Hugging Face Transformers library.