In this article, we will compare two state-of-the-art large language models for zero-shot text classification: Google Gemini Pro and OpenAI GPT-4.

Zero-shot text classification is a task where a model is trained on a set of labeled examples but can then classify new examples from previously unseen classes. This is useful for situations where the labeled data is small, or the output classes are dynamic and unpredictable.
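To make the idea of dynamic output classes concrete, here is a minimal sketch of how a zero-shot prompt could be assembled when the label set is only known at run time. The helper name `build_zero_shot_prompt` and its wording are illustrative assumptions, not part of any library; the prompts used later in this article are hard-coded for two labels.

```python
def build_zero_shot_prompt(text, labels):
    """Return a prompt asking a model to pick exactly one of `labels`.

    Hypothetical helper for illustration; no labeled examples are included,
    which is what makes the prompt zero-shot.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    label_list = ", ".join(labels)
    return (
        f"Classify the following text into one of these categories: {label_list}.\n"
        "Return only the category name.\n"
        f"Text: {text}"
    )


# The label set can change between calls without any retraining.
prompt = build_zero_shot_prompt("The plot was dull.", ["positive", "negative"])
print(prompt)
```

Passing a different list of labels produces a prompt for a different classification task, which is the property that makes this approach attractive when classes change often.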

We will use the IMDB movie review dataset as an example and try to classify the reviews into positive or negative sentiments without using any labeled data. We will use the results to compare the speed, accuracy, and price of Google Gemini Pro and OpenAI GPT-4. By the end of this tutorial, you will know which model to select for your custom use cases.

Importing and Installing Required Libraries

The first step is to install the required libraries. I ran my code on Google Colab. Therefore, I only needed to install the Google Cloud and OpenAI APIs. The following script installs these libraries.

Note: Before running the scripts in this tutorial, you must create accounts with OpenAI and Google Cloud Vertex AI and obtain your API credentials. GPT-4 and Gemini Pro are paid LLMs, but you can get free credits for testing when you sign up.

pip install --upgrade google-cloud-aiplatform

pip install openai

The rest of the libraries come pre-installed with Google Colab.

The following script imports the libraries you will need to run the scripts in this tutorial.

import os
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from openai import OpenAI
import vertexai
from vertexai.preview.generative_models import GenerativeModel, Part

Importing the Dataset

To compare Gemini Pro and GPT-4, we will perform zero-shot classification on IMDB movie reviews. You can download the CSV file from Kaggle.

The following script imports the CSV file into a Pandas DataFrame, shuffles the dataset randomly, and keeps only the first 100 rows for simplicity and to limit API costs.

# Load the reviews, shuffle all rows, and keep the first 100
dataset = pd.read_csv(r"D:\Datasets\IMDB Dataset.csv")
dataset = dataset.sample(frac=1).reset_index(drop=True)
dataset = dataset.head(100)

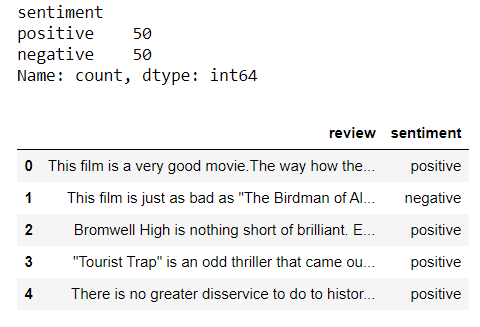

print(dataset['sentiment'].value_counts())

dataset.head()

You can see we have 50 reviews with positive sentiments and 50 reviews with negative sentiments.
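A plain shuffle gives a different sample on every run, and it does not by itself guarantee an even class split. If you want a reproducible, balanced sample, one option is to draw a fixed number of rows per class with a fixed seed. The sketch below uses a synthetic DataFrame as a stand-in for the IMDB file; the column names match the dataset used here, while the seed value and the toy data are assumptions for illustration.

```python
import pandas as pd

# Synthetic stand-in for the IMDB data (assumption: columns "review" and "sentiment").
toy = pd.DataFrame({
    "review": [f"review {k}" for k in range(1000)],
    "sentiment": ["positive" if k % 3 else "negative" for k in range(1000)],
})

# Draw the same number of rows from each class, with a fixed seed for repeatability.
balanced = (
    toy.groupby("sentiment")
       .sample(n=50, random_state=42)
       .sample(frac=1, random_state=42)   # shuffle the combined rows
       .reset_index(drop=True)
)

print(balanced["sentiment"].value_counts())
```

With the real data, replacing `toy` with the DataFrame returned by `pd.read_csv` should give 50 positive and 50 negative reviews on every run.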

Zero-Shot Text Classification with Google Gemini Pro

Let's first perform zero-shot classification with the Gemini Pro model. You first have to set an environment variable containing the path to the JSON file that holds your Vertex AI service account credentials. The following script does that.

os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = "PATH_TO_VERTEX_AI_SERVICE_ACCOUNT JSON FILE"

Next, we will use the Gemini Pro generative model, which produces natural language responses for a given prompt. We will define the find_sentiment_gemini() function, which takes a movie review as input and returns the sentiment value as output. We will also set some generation parameters, such as the maximum number of output tokens and the temperature. Exceptions are handled later, in the loop that calls this function.

# Load the Gemini Pro model through the Vertex AI SDK
model = GenerativeModel("gemini-pro")

# Generation settings: short output, temperature 0
config = {
    "max_output_tokens": 100,
    "temperature": 0,
}

def find_sentiment_gemini(review):
    # Build the zero-shot prompt for a single review
    content = """What is the sentiment expressed in the following IMDB movie review?
    Select sentiment value from positive or negative. Return only the sentiment value in small letters.
    Movie review: {}""".format(review)

    responses = model.generate_content(
        content,
        generation_config=config,
        stream=True,
    )

    # Return the text of the first streamed chunk
    for response in responses:
        return response.text

Next, we will use the following script to loop through the reviews and append the sentiment values to a list.

%%time

all_sentiments = []
reviews_list = dataset["review"].tolist()

i = 0
exceptions = 0

while i < len(reviews_list):
    try:
        review = reviews_list[i]
        sentiment_value = find_sentiment_gemini(review)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # i is not advanced on failure, so the same review is retried
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)

Output:

Total exception count: 0
CPU times: total: 1.23 s
Wall time: 53.3 s

The above output shows that it took 53.3 seconds to process 100 reviews, and no exception occurred.
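The loop above retries a failed review until it succeeds, which works for transient errors but can run forever if the error is permanent, for example with invalid credentials. A bounded retry with a pause between attempts is one way to guard against that. The following is only a sketch: `call_with_retries`, its parameters, and the `flaky_classifier` stand-in are hypothetical names introduced for illustration, not part of the Vertex AI or OpenAI libraries.

```python
import time


def call_with_retries(func, arg, max_retries=3, base_delay=1.0):
    """Call func(arg), retrying on any exception with exponential backoff.

    Hypothetical helper: returns None if every attempt fails.
    """
    for attempt in range(max_retries):
        try:
            return func(arg)
        except Exception as e:
            print(f"Attempt {attempt + 1} failed: {e}")
            if attempt < max_retries - 1:
                time.sleep(base_delay * 2 ** attempt)
    return None


# Demonstration with a stand-in function that fails twice, then succeeds.
calls = {"count": 0}

def flaky_classifier(review):
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "positive"

result = call_with_retries(flaky_classifier, "Great film!", base_delay=0.01)
print(result, calls["count"])
```

In the classification loop, the call to the sentiment function could be wrapped as `call_with_retries(find_sentiment_gemini, review)`, with a `None` result recorded as a failed prediction instead of being retried indefinitely.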

Finally, we can evaluate the model's performance using the following script.

# accuracy_score expects the true labels first, then the predictions
accuracy = accuracy_score(dataset["sentiment"], all_sentiments)
print("Accuracy:", accuracy)

The Gemini Pro model returns an accuracy of 93%, which is impressive.
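Note that accuracy_score counts only exact string matches, so a reply such as "Positive" or "positive" followed by a newline would be scored as an error even though the sentiment is right. The article does not show whether such variants occurred in this run, but a small normalization step is a cheap safeguard. The helper below is a hypothetical sketch; the name `normalize_sentiment` and the "unknown" fallback label are assumptions.

```python
def normalize_sentiment(raw, labels=("positive", "negative"), fallback="unknown"):
    """Map a raw model reply to one of `labels`, or `fallback` if none matches."""
    if raw is None:
        return fallback
    # Trim whitespace, lowercase, then drop surrounding punctuation and quotes.
    cleaned = raw.strip().lower().strip(".!\"'")
    for label in labels:
        if cleaned == label:
            return label
    return fallback


replies = ["positive", " Negative\n", "Positive.", "It is hard to say"]
normalized = [normalize_sentiment(r) for r in replies]
print(normalized)
```

Applying the helper to every element of all_sentiments before calling accuracy_score keeps formatting differences from being counted as misclassifications.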

Zero-Shot Text Classification with GPT-4

Let's perform zero-shot classification on the same dataset using the OpenAI GPT-4 model.

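The following script creates the OpenAI client with your API key.

client = OpenAI(
    # Reads the key from the named environment variable.
    # If your key is stored in OPENAI_API_KEY, this argument can be omitted.
    api_key=os.environ.get('YOUR_OPENAI_KEY'),
)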

Next, as we did in the case of Gemini Pro, we will define the find_sentiment_gpt() function that takes a movie review as input and returns the sentiment value as output. We will use the gpt-4 model from OpenAI to predict sentiments.

def find_sentiment_gpt(review):
    # Same zero-shot prompt as for Gemini Pro
    content = """What is the sentiment expressed in the following IMDB movie review?
    Select sentiment value from positive or negative. Return only the sentiment value in small letters.
    Movie review: {}""".format(review)

    sentiment = client.chat.completions.create(
        model="gpt-4",
        temperature=0,
        max_tokens=100,
        messages=[
            {"role": "user", "content": content}
        ]
    )

    # Return the text of the first (and only) completion
    return sentiment.choices[0].message.content

Next, we will loop through all the movie reviews in the dataset, make a sentiment prediction on these reviews using the find_sentiment_gpt() function, and append the response to the all_sentiments list.

%%time

all_sentiments = []
reviews_list = dataset["review"].tolist()

i = 0
exceptions = 0

while i < len(reviews_list):
    try:
        review = reviews_list[i]
        sentiment_value = find_sentiment_gpt(review)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # i is not advanced on failure, so the same review is retried
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)

Output:

Total exception count: 0
CPU times: total: 297 ms
Wall time: 2min 38s

The above output shows that it took 2 minutes 38 seconds to process the 100 reviews, about three times slower than Gemini Pro.
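The "three times slower" figure follows directly from the two reported wall times. The short calculation below reproduces it and also gives the average time per review. It only uses the numbers reported in this article; keep in mind that they come from a single run and include network latency.

```python
gemini_seconds = 53.3            # wall time reported for Gemini Pro
gpt4_seconds = 2 * 60 + 38       # 2min 38s reported for GPT-4
n_reviews = 100

slowdown = round(gpt4_seconds / gemini_seconds, 2)

print("Gemini Pro seconds per review:", gemini_seconds / n_reviews)
print("GPT-4 seconds per review:", gpt4_seconds / n_reviews)
print("Slowdown factor:", slowdown)
```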

Finally, the following script prints the accuracy of GPT-4 for zero-shot classification on our dataset.

# accuracy_score expects the true labels first, then the predictions
accuracy = accuracy_score(dataset["sentiment"], all_sentiments)
print("Accuracy:", accuracy)

Output:

Accuracy: 0.95

The above output shows that GPT-4 achieves an accuracy of 95%, which is 2 percentage points higher than Gemini Pro.
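On a sample of 100 reviews, a gap of 2 percentage points corresponds to just two reviews. To get a feel for how much an accuracy measured on 100 samples can vary, the sketch below computes an approximate 95% interval for each model using the normal approximation to the binomial. This calculation is my own addition and was not part of the experiment; the function name `accuracy_interval` is hypothetical.

```python
import math


def accuracy_interval(accuracy, n, z=1.96):
    """Approximate 95% interval for an accuracy measured on n samples
    (normal approximation to the binomial)."""
    se = math.sqrt(accuracy * (1 - accuracy) / n)
    return accuracy - z * se, min(1.0, accuracy + z * se)


gemini_low, gemini_high = accuracy_interval(0.93, 100)
gpt4_low, gpt4_high = accuracy_interval(0.95, 100)
print(f"Gemini Pro: {gemini_low:.3f} to {gemini_high:.3f}")
print(f"GPT-4:      {gpt4_low:.3f} to {gpt4_high:.3f}")
```

The two intervals overlap, so a larger test set would be needed to confirm that the difference in accuracy is real.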

Final Verdict

The following table summarizes the results. Though GPT-4 achieved slightly better accuracy, it is slower and about 30 times more expensive than Gemini Pro. However, with some prompt engineering, you might be able to achieve better accuracy with Gemini Pro as well.

| Model      | Time (100 reviews) | Accuracy | Price                              |
|------------|--------------------|----------|------------------------------------|
| Gemini Pro | 53.3 s             | 93%      | $0.00025 per 1k characters         |
| GPT-4      | 2 min 38 s         | 95%      | approx. $0.00750 per 1k characters |
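To translate the per-character prices in the table into a budget for your own workload, you can multiply the total number of prompt characters by the price. The sketch below does this for a hypothetical workload of 100 prompts with 1,500 characters each. The workload size and the helper name `estimate_cost` are assumptions, the GPT-4 figure is treated as approximate, and output tokens are ignored, so treat the result as a rough estimate. OpenAI bills by tokens rather than characters, which is another reason the GPT-4 number is only indicative.

```python
GEMINI_PRO_PRICE = 0.00025   # USD per 1k characters, from the table
GPT4_PRICE = 0.00750         # USD per 1k characters (approximate), from the table


def estimate_cost(texts, price_per_1k_chars):
    """Estimate the input cost in USD for a list of texts."""
    total_chars = sum(len(t) for t in texts)
    return total_chars / 1000 * price_per_1k_chars


# Hypothetical workload: 100 prompts of 1,500 characters each.
sample_prompts = ["x" * 1500] * 100
gemini_cost = estimate_cost(sample_prompts, GEMINI_PRO_PRICE)
gpt4_cost = estimate_cost(sample_prompts, GPT4_PRICE)
price_ratio = round(GPT4_PRICE / GEMINI_PRO_PRICE, 1)

print(f"Gemini Pro: ${gemini_cost:.4f}, GPT-4: ${gpt4_cost:.4f}")
print("Price ratio:", price_ratio)
```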

To conclude, I suggest you try Gemini Pro with better prompts as it is faster and cheaper than GPT-4.

I would love to read your feedback in the comment section.