Comparison Between Fine-tuned and Default GPT-3.5 Turbo for Text Classification

In one of my previous articles, I showed you how to perform zero-shot text classification using the OpenAI GPT-4o and Meta Llama 3 models. I used the default models to predict the sentiment of airline tweets. The default models perform well out of the box. However, you can fine-tune them on your specialized tasks to further improve their performance.

In this article, I will show you how to fine-tune the OpenAI GPT-3.5 Turbo model for airline tweet classification. As you will see, the fine-tuned model is noticeably more accurate than the default model. I would have loved to fine-tune GPT-4, but OpenAI currently does not support that.

By the end of this article, you will know how to fine-tune the GPT-3.5 Turbo model for text classification on your custom dataset. So, let's begin without further ado.

Importing Required Libraries

The first step is to install the OpenAI Python library. If you haven't already, you must create an OpenAI account to retrieve your OpenAI API Key.

pip install openai

The script below imports the required Python libraries into our application.

from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from openai import OpenAI
import pandas as pd
import json
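The API key mentioned above does not have to be pasted into the script. As a minimal sketch, the helper below reads it from the `OPENAI_API_KEY` environment variable and fails with a clear message when the variable is missing. The name `load_api_key` is my own, chosen for illustration; it is not part of the openai package.

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Return the API key stored in an environment variable.

    Hypothetical helper for illustration; not part of the openai package.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key
```

You could then create the client with `OpenAI(api_key=load_api_key())`. As far as I know, the OpenAI client also falls back to this variable on its own when no `api_key` argument is passed.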

Importing the Dataset

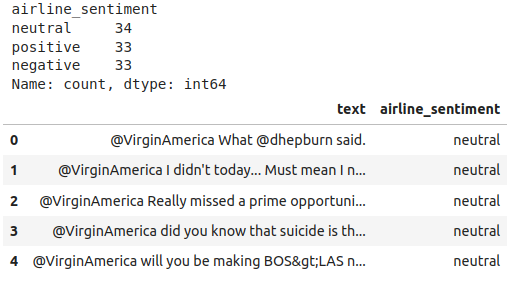

We will fine-tune our model using the Twitter US Airline Sentiment dataset. This will help us compare the performance of the fine-tuned GPT-3.5 Turbo with the default GPT-4o model we used in a previous article.

The script below imports the dataset. We also define the preprocess_data() function, which accepts a dataset and an index value as parameters. The function splits the dataset by sentiment category and returns 34 neutral, 33 positive, and 33 negative tweets, starting from the passed index within each category. This way, we get 100 nearly balanced records for fine-tuning.

dataset = pd.read_csv(r"/home/mani/Datasets/Tweets.csv")

def preprocess_data(dataset, n):
    # Remove rows where 'airline_sentiment' or 'text' are NaN
    dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

    # Remove rows where 'airline_sentiment' or 'text' are empty strings
    dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

    # Filter the DataFrame for each sentiment
    neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']
    positive_df = dataset[dataset['airline_sentiment'] == 'positive']
    negative_df = dataset[dataset['airline_sentiment'] == 'negative']

    # Select records from Nth index
    neutral_sample = neutral_df[n: n + 34]
    positive_sample = positive_df[n: n + 33]
    negative_sample = negative_df[n: n + 33]

    # Concatenate the samples into one DataFrame
    dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

    # Reset index if needed
    dataset.reset_index(drop=True, inplace=True)

    dataset = dataset[["text", "airline_sentiment"]]

    return dataset

filtered_data = preprocess_data(dataset, 0)

# print value counts
print(filtered_data["airline_sentiment"].value_counts())

filtered_data.head()

Output:

Converting Training Data to JSON Format for Fine-tuning

You need to convert the dataset into the JSON Lines format (one JSON object per line), as explained in the official documentation. I wrote a simple function that converts the input Pandas DataFrame to the required format. Note that fine-tuning depends heavily on the content of the system role, so be careful while specifying this value.

# JSON file path
json_file_path = '/home/mani/Datasets/airline_sentiments.json'

# Function to create the JSON structure for each row
def create_json_structure(row):
    return {
        "messages": [
            {"role": "system", "content": "You are a Twitter sentiment analysis expert who can predict sentiment expressed in the tweets about an airline. You select sentiment value from positive, negative, or neutral."},
            {"role": "user", "content": row['text']},
            {"role": "assistant", "content": row['airline_sentiment']}
        ]
    }

# Convert DataFrame to JSON structures
json_structures = filtered_data.apply(create_json_structure, axis=1).tolist()

# Write JSON structures to file, each on a new line
with open(json_file_path, 'w') as f:
    for json_structure in json_structures:
        f.write(json.dumps(json_structure) + '\n')

print(f"Data has been written to {json_file_path}")
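Before uploading, it can be worth checking the file locally. The sketch below is a simple sanity check under my own assumptions about what matters for this dataset: every line parses as JSON, has a `messages` list with string contents and known roles, and ends with an assistant message. It does not reproduce OpenAI's server-side validation, and `validate_chat_jsonl` is a hypothetical name.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_chat_jsonl(lines):
    """Return a list of (line_number, problem) tuples for an iterable of JSONL lines."""
    problems = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            problems.append((number, "empty line"))
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, "not valid JSON"))
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((number, "missing 'messages' list"))
            continue
        for message in messages:
            if not isinstance(message, dict) or message.get("role") not in ALLOWED_ROLES:
                problems.append((number, "unexpected role"))
                break
            if not isinstance(message.get("content"), str):
                problems.append((number, "content is not a string"))
                break
        else:
            # Runs only when no message was rejected above
            if messages[-1]["role"] != "assistant":
                problems.append((number, "last message is not from the assistant"))
    return problems
```

For the file written above, you could call it as `validate_chat_jsonl(open(json_file_path))`; an empty list means no problems were found by these particular checks.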

Fine-tuning can be expensive compared to making inferences from an OpenAI model. You can check the estimated costs by following the instructions in the official documentation.

Fine-Tuning GPT-3.5 Turbo for Text Classification

Now, we are ready to fine-tune our model on the training set. To do so, you need to upload your JSON file to the OpenAI server first.

Create an OpenAI client object and pass the file path to the files.create() method, as shown in the following script:

api_key = "YOUR_OPEN_AI_API_KEY"  # replace this with your OpenAI API key

client = OpenAI(api_key=api_key)

training_file = client.files.create(
    file=open(json_file_path, "rb"),
    purpose="fine-tune"
)

print(training_file.id)

Once the file is uploaded, you will receive a file ID, as the above script demonstrates. To start fine-tuning, you must call the fine_tuning.jobs.create() method and pass it the ID of the uploaded training file and the model name.

fine_tuning_job = client.fine_tuning.jobs.create(
    training_file=training_file.id,
    model="gpt-3.5-turbo"
)

Running the above script will start fine-tuning. You can display various fine-tuning events using the following script.

# List up to 10 events from a fine-tuning job
print(client.fine_tuning.jobs.list_events(fine_tuning_job_id=fine_tuning_job.id, limit=10))
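Fine-tuning runs asynchronously, so one option is to poll the job until it finishes. The sketch below assumes that the job object returned by `client.fine_tuning.jobs.retrieve()` exposes a `status` attribute and that the terminal values are `succeeded`, `failed`, and `cancelled`, which is my understanding of the API. The function name `wait_for_fine_tuning` and its parameters are hypothetical.

```python
import time

# Assumed terminal status values for a fine-tuning job
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tuning(client, job_id, poll_seconds=30, max_polls=120, sleep=time.sleep):
    """Poll a fine-tuning job until it reaches a terminal status or polls run out."""
    job = client.fine_tuning.jobs.retrieve(job_id)
    for _ in range(max_polls):
        if job.status in TERMINAL_STATUSES:
            break
        sleep(poll_seconds)
        job = client.fine_tuning.jobs.retrieve(job_id)
    return job
```

A call such as `wait_for_fine_tuning(client, fine_tuning_job.id)` returns the last job object it saw, so you should still check that its status is `succeeded` before using the model.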

Finally, once fine-tuning is completed, you will receive an email with the ID of your fine-tuned model, which you can use for inferences. You can also retrieve the ID of your fine-tuned model using the following script.

ft_model_id = client.fine_tuning.jobs.retrieve(fine_tuning_job.id).fine_tuned_model

Text Classification with Fine-tuned GPT-3.5 Turbo Model

The rest of the process is similar to what you saw in the previous article. We will define the find_sentiment_ft_gpt() function that uses our fine-tuned model to predict the sentiment of the tweet passed as a parameter.

def find_sentiment_ft_gpt(tweet):
    content = """What is the sentiment expressed in the following tweet about an airline?
    Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
    tweet: {}""".format(tweet)

    sentiment = client.chat.completions.create(
        model=ft_model_id,
        temperature=0,
        max_tokens=10,
        messages=[
            {"role": "user", "content": content}
        ]
    )

    return sentiment.choices[0].message.content
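The model returns free text, so a reply such as "Negative." would count as wrong when compared to the label "negative". As a hedged sketch, the helper below normalizes a reply to one of the three labels. The name `normalize_sentiment` and the `unknown` fallback value are my own choices, not something the article's pipeline uses.

```python
VALID_LABELS = ("positive", "negative", "neutral")


def normalize_sentiment(raw_output, fallback="unknown"):
    """Map a raw model reply to one of the three labels, or to `fallback`."""
    # Trim whitespace, lowercase, then drop surrounding punctuation and quotes
    cleaned = raw_output.strip().lower().strip(".!\"'")
    if cleaned in VALID_LABELS:
        return cleaned
    # Accept replies such as "Sentiment: negative" that contain exactly one label
    found = [label for label in VALID_LABELS if label in cleaned]
    return found[0] if len(found) == 1 else fallback
```

If you adopt something like this, you would wrap the return value of the prediction function, for example `normalize_sentiment(find_sentiment_ft_gpt(tweet))`.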

Next, we will filter 100 test tweets from the dataset. These tweets will be different from the ones used for fine-tuning. We will iterate through all the test tweets and predict their sentiment using the find_sentiment_ft_gpt() function.

test_data = preprocess_data(dataset, 100)

all_sentiments = []
tweets_list = test_data["text"].tolist()

i = 0
exceptions = 0

while i < len(tweets_list):
    try:
        tweet = tweets_list[i]
        sentiment_value = find_sentiment_ft_gpt(tweet)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # i is not incremented here, so the same tweet is retried on the next iteration
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

print("Total exception count:", exceptions)
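A while loop that only advances `i` on success keeps retrying the same tweet for as long as the call keeps failing, which never ends if the error is permanent (an invalid API key, for instance). The sketch below shows one way to cap the number of attempts. `call_with_retries` and its parameters are hypothetical names for illustration, and the increasing wait between attempts is my own design choice.

```python
import time


def call_with_retries(func, argument, max_attempts=3, wait_seconds=2.0, sleep=time.sleep):
    """Call func(argument); retry on any exception, up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func(argument)
        except Exception as error:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            print(f"Attempt {attempt} failed ({error}); retrying")
            sleep(wait_seconds * attempt)  # wait a little longer each time
```

Inside the loop, you could then write `call_with_retries(find_sentiment_ft_gpt, tweet)` and decide separately what to do with a tweet that still fails after the last attempt.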

Finally, we can print the accuracy of the fine-tuned model using the following script.

# accuracy_score expects the true labels first, then the predictions
accuracy = accuracy_score(test_data["airline_sentiment"], all_sentiments)
print("Accuracy:", accuracy)

Output:

Accuracy: 0.81

The above output shows that our fine-tuned model achieved an accuracy of 81% for text classification, which is better than both GPT-4o and Llama 3, as you saw in the previous article.
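A single accuracy figure hides how the model does on each sentiment. As a small sketch, the function below computes accuracy per class using only the standard library. The name `per_class_accuracy` is hypothetical.

```python
from collections import Counter


def per_class_accuracy(true_labels, predicted_labels):
    """Return {label: fraction of that label's examples predicted correctly}."""
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: correct[label] / totals[label] for label in totals}
```

With the variables used in this article, the call would be `per_class_accuracy(test_data["airline_sentiment"].tolist(), all_sentiments)`.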

Text Classification with Default GPT-3.5 Turbo Model

For comparison, let's make predictions using the default (not fine-tuned) GPT-3.5 Turbo model, as shown in the script below.

def find_sentiment_gpt(tweet):
    content = """What is the sentiment expressed in the following tweet about an airline?
    Select sentiment value from positive, negative, or neutral. Return only the sentiment value in small letters.
    tweet: {}""".format(tweet)

    sentiment = client.chat.completions.create(
        model="gpt-3.5-turbo",
        temperature=0,
        max_tokens=10,
        messages=[
            {"role": "user", "content": content}
        ]
    )

    return sentiment.choices[0].message.content

all_sentiments = []

i = 0
exceptions = 0

while i < len(tweets_list):
    try:
        tweet = tweets_list[i]
        sentiment_value = find_sentiment_gpt(tweet)
        all_sentiments.append(sentiment_value)
        i = i + 1
        print(i, sentiment_value)
    except Exception as e:
        # i is not incremented here, so the same tweet is retried on the next iteration
        print("===================")
        print("Exception occurred", e)
        exceptions = exceptions + 1

# accuracy_score expects the true labels first, then the predictions
accuracy = accuracy_score(test_data["airline_sentiment"], all_sentiments)
print("Accuracy:", accuracy)

Output:

Accuracy: 0.71

The above output shows that the default model's accuracy was 10 percentage points lower than that of the fine-tuned model.
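The gap between the two models can be stated in two ways, and the short calculation below shows both, using only the two accuracy values reported above. The variable names are my own.

```python
fine_tuned_accuracy = 0.81  # reported above for the fine-tuned model
default_accuracy = 0.71     # reported above for the default model

# Absolute difference, in percentage points
absolute_gain = fine_tuned_accuracy - default_accuracy

# Relative improvement over the default model
relative_gain = absolute_gain / default_accuracy

print(f"Absolute gain: {absolute_gain * 100:.1f} percentage points")  # 10.0
print(f"Relative gain: {relative_gain * 100:.1f}%")                   # 14.1%
```

In other words, the difference is 10 percentage points in absolute terms, or roughly a 14% relative improvement over the default model.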

Conclusion

Fine-tuning an LLM can result in a significant performance boost. In this article, we saw how to improve the performance of a default LLM using fine-tuning. Specifically, we fine-tuned the OpenAI GPT-3.5 Turbo model, which resulted in an accuracy gain of 10 percentage points over the default model and 3 percentage points over GPT-4o and Llama 3.

It would be great to see how the fine-tuned GPT-4 model performs once OpenAI enables its fine-tuning. For now, I recommend fine-tuning GPT-3.5 Turbo on your custom dataset to achieve better results.