OpenAI and Anthropic are two AI giants delivering state-of-the-art large language models for various tasks. In a previous article, I compared OpenAI GPT-4o and Anthropic Claude 3.5 sonnet models for text classification tasks. That article was published almost a year ago. Since then, both OpenAI and Anthropic have released state-of-the-art models in o3 and Claude 4 opus, respectively.

In this article, I compare the performance of OpenAI o3 and Claude 4 opus for zero-shot text classification and summarization tasks.

So, let's begin without ado.

Installing and Importing Required Libraries

The following script installs OpenAI and Anthropic Python libraries along with the other modules required to run codes in this article.

!pip install anthropic

!pip install openai

!pip install rouge-score

!pip install --upgrade openpyxl

!pip install pandas openpyxlThe script below imports the required libraries.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from itertools import combinations

from collections import Counter

from sklearn.metrics import hamming_loss, accuracy_score

from rouge_score import rouge_scorer

import anthropic

from openai import OpenAI

from google.colab import userdata

OPENAI_API_KEY = userdata.get('OPENAI_API_KEY')

ANTHROPIC_API_KEY = userdata.get('ANTHROPIC_API_KEY')Text Classification Comparison

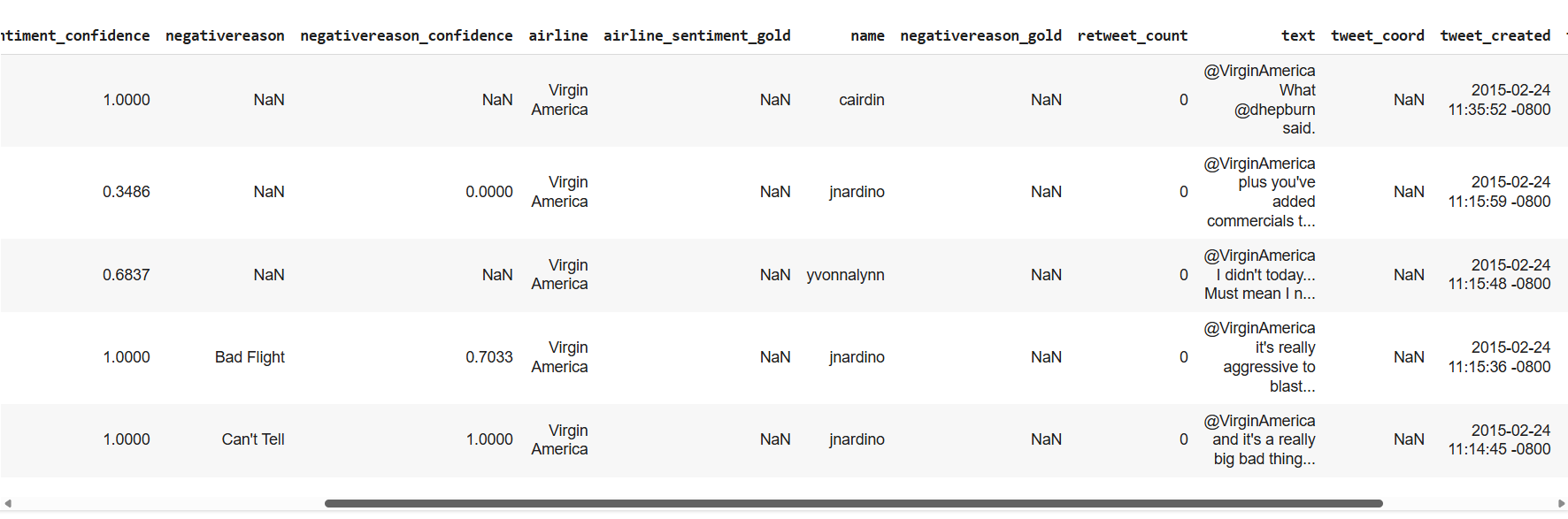

Let's first compare o3 and Claude 4 opus for text classification. We will predict the sentiment of tweets in the Twitter US Airline dataset from Kaggle.

The following script imports the dataset into a Pandas dataframe.

## Dataset download link

## https://www.kaggle.com/datasets/crowdflower/twitter-airline-sentiment?select=Tweets.csv

dataset = pd.read_csv(r"/content/Tweets.csv")

print(dataset.shape)

dataset.head()Output:

The text column contains a tweet's text, while the airline_sentiment column contains the corresponding sentiment value, which can be positive, negative, or neutral.

We will analyze the sentiments of 100 tweets, evenly divided into three categories: 33 positive, 33 negative, and 34 neutral tweets.

# Remove rows where 'airline_sentiment' or 'text' are NaN

dataset = dataset.dropna(subset=['airline_sentiment', 'text'])

# Remove rows where 'airline_sentiment' or 'text' are empty strings

dataset = dataset[(dataset['airline_sentiment'].str.strip() != '') & (dataset['text'].str.strip() != '')]

# Filter the DataFrame for each sentiment

neutral_df = dataset[dataset['airline_sentiment'] == 'neutral']

positive_df = dataset[dataset['airline_sentiment'] == 'positive']

negative_df = dataset[dataset['airline_sentiment'] == 'negative']

# Randomly sample records from each sentiment

neutral_sample = neutral_df.sample(n=34)

positive_sample = positive_df.sample(n=33)

negative_sample = negative_df.sample(n=33)

# Concatenate the samples into one DataFrame

dataset = pd.concat([neutral_sample, positive_sample, negative_sample])

# Reset index if needed

dataset.reset_index(drop=True, inplace=True)

# print value counts

print(dataset["airline_sentiment"].value_counts())

Output:

airline_sentiment

neutral 34

positive 33

negative 33

Name: count, dtype: int64Next, we define the make_prediction() function that accepts OpenAI or Anthropic client, the model ID, the content (input to the model), and the maximum number of output tokens.

Depending on the client and the model ID, the make_prediction model uses either the o3 or the Claude 4 opus model for generating the model response.

def make_prediction(client, model, content, max_tokens):

if model == "o3":

response = client.chat.completions.create(

model= "gpt-4",

temperature = 0,

max_tokens = max_tokens,

messages=[

{"role": "user", "content": content}

]

)

response_value = response.choices[0].message.content

if model == "claude-opus-4-0":

response = client.messages.create(

model= model,

max_tokens = max_tokens,

temperature=0.0,

messages=[

{"role": "user", "content": content}

]

)

response_value = response.content[0].text

return response_valueWe will define the classify_tweets() function that accepts the client and model names along with the dataset and maximum number of output tokens. The function iterates through all the tweets in the dataset, embeds them in the content, and sends them to the make_prediction() function for classification.

The prediction for all the tweets is stored in the all_sentiments list, which is returned to the calling function.

def classify_tweets(client, model, dataset, max_tokens):

all_sentiments = []

tweets_list = dataset["text"].tolist()

message = False

exceptions = 0

for tweet in tweets_list:

content = """What is the sentiment expressed in the following tweet about an airline?

Select sentiment value from positive, negative, or neutral.

Return only the sentiment value in small letters e.g. positive, negative, or neutral in the output.

Here is the tweet: {}""".format(tweet)

sentiment_value = make_prediction(client, model, content, max_tokens)

print(sentiment_value)

all_sentiments.append(sentiment_value)

return all_sentiments

Text Classification with OpenAI o3

Let's first predict tweet sentiments using the OpenAI o3 model.

%%time

client = OpenAI(api_key = OPENAI_API_KEY,)

model = "o3"

max_tokens = 10

predictions = classify_tweets(client, model, dataset, max_tokens)

accuracy = accuracy_score(predictions, dataset["airline_sentiment"])

print("Accuracy:", accuracy)Output:

Accuracy: 0.87

CPU times: user 897 ms, sys: 99.6 ms, total: 996 ms

Wall time: 1min 4sThe above output shows we achieved an accuracy of 87%. In addition, 100 tweets are processed in 1 minute and 4 seconds.

Text Classification with Claude 4 Opus

Next, we will classify tweets using the Claude 4 opus.

%%time

client = anthropic.Anthropic(api_key = ANTHROPIC_API_KEY)

model = "claude-opus-4-0"

predictions = classify_tweets(client, model, dataset, max_tokens)

accuracy = accuracy_score(predictions, dataset["airline_sentiment"])

print("Accuracy:", accuracy)

Output:

Accuracy: 0.79

CPU times: user 2.02 s, sys: 279 ms, total: 2.3 s

Wall time: 5min 6sWe achieved an accuracy of 79%, with a processing time of 5 minutes and 6 seconds. The OpenAI o3 model is a clear winner here.

Text Summarization Comparison

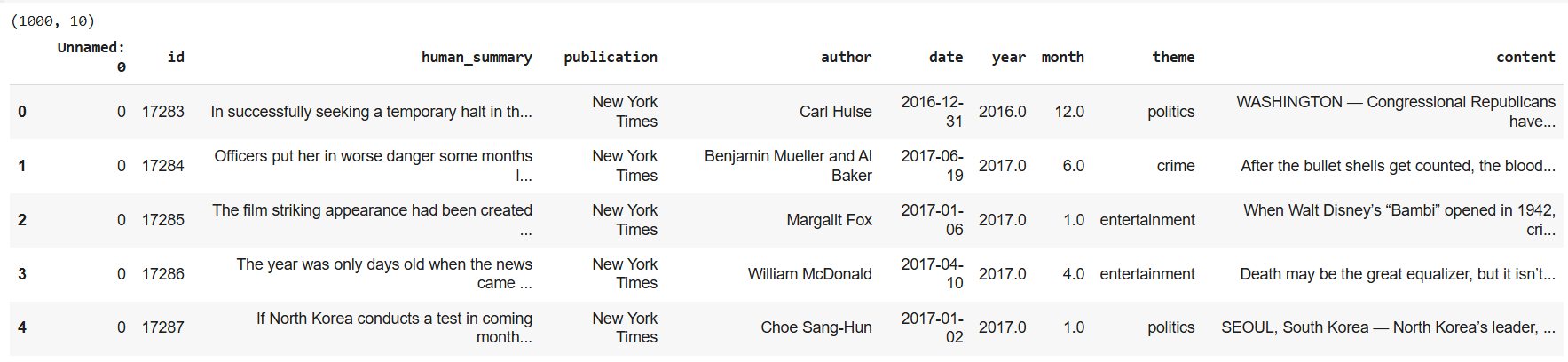

For comparing text summarization performance, we compare articles in the News Article dataset from GitHub.

# https://github.com/reddzzz/DataScience_FP/blob/main/dataset.xlsx

dataset = pd.read_excel(r"/content/summary_datasets.xlsx")

print(dataset.shape)

dataset.head()Output:

The content and human_summary columns contain article texts and corresponding human-generated summaries, respectively.

To evaluate text summarization performance, we will use the ROUGE metric.

The following script defines the calculate_rouge() function that calculates ROUGE score for the predicted and target summaries.

def calculate_rouge(reference, candidate):

scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)

scores = scorer.score(reference, candidate)

return {key: value.fmeasure for key, value in scores.items()}Finally, we define the summarize_articles() function, which takes the client object, the model ID, the dataset, and the maximum number of output tokens. The function iterates through the first 10 records in the dataset and calls the make_prediction() function to generate summaries using o3 and Claude 4 opus models.

def summarize_articles(client, model_id, dataset, max_tokens):

results = []

for i, (_, row) in enumerate(dataset[:10].iterrows(), start=1):

article = row['content']

human_summary = row['human_summary']

print(f"Summarizing article {i}.")

content = f"Summarize the following article in 1150 characters. The summary should look like human created:\n\n{article}\n\nSummary:"

generated_summary = make_prediction(client, model, content, max_tokens)

rouge_scores = calculate_rouge(human_summary, generated_summary)

results.append({

'article_id': row.id,

'generated_summary': generated_summary,

'rouge1': rouge_scores['rouge1'],

'rouge2': rouge_scores['rouge2'],

'rougeL': rouge_scores['rougeL']

})

return resultsText Summarization with OpenAI o3

We first call the summarize_articles() function using the OpenAI client and the o3 model.

%%time

client = OpenAI(api_key = OPENAI_API_KEY,)

model = "o3"

max_tokens = 1150

results = summarize_articles(client, model, dataset, max_tokens)

results_df = pd.DataFrame(results)

mean_values = results_df[["rouge1", "rouge2", "rougeL"]].mean()

print(mean_values)

Output:

rouge1 0.351287

rouge2 0.115566

rougeL 0.179441

dtype: float64

CPU times: user 668 ms, sys: 59.4 ms, total: 727 ms

Wall time: 1min 21sText Summarization with Claude 4 Opus

Next, we call the summarize_articles() function using the claude 4 opus model.

%%time

client = anthropic.Anthropic(api_key = ANTHROPIC_API_KEY)

model = "claude-opus-4-0"

results = summarize_articles(client, model, dataset, max_tokens)

results_df = pd.DataFrame(results)

mean_values = results_df[["rouge1", "rouge2", "rougeL"]].mean()

print(mean_values)Output:

rouge1 0.341579

rouge2 0.067680

rougeL 0.141958

dtype: float64

CPU times: user 974 ms, sys: 115 ms, total: 1.09 s

Wall time: 2min 12sThe text summarization outputs for the o3 and Claude 4 models show that OpenAI performs better on all three ROUGE metrics. In addition, it takes much less time to process records.

Conclusion

The results from this article show that OpenAI o3 is significantly better than Claude 4 Opus for text classification and summarization tasks. Furthermore, the OpenAI API has significantly lower latency compared to the Anthropic API.

Let me know what you think of these results, and feel free to share if you have any benchmarks on the performance of these models.